Posts related to booting up computers, UEFI, classic BIOS, and loaders.

Running a colo / hosted server with Full Disk Encryption (FDE) requires logging in remotely during initramfs, to unlock LUKS. The usual setup tutorials run Dropbear on a different port, to prevent a host key mismatch between OpenSSH and Dropbear, and the scary MitM warning it implies.

However, it's much cleaner and nicer to share the same host key between Dropbear during boot-up and OpenSSH during regular operation.

This recipe shows how to convert the OpenSSH host keys into the Dropbear key format for Debian's dropbear-initramfs.

Pre-2022 Dropbear

Until dropbear/#136 was fixed in 2022, OpenSSH host keys were not supported, and Ed25519 didn't fully work either.

Regardless of the key type, OpenSSH host keys begin with the following line:

# head -1 /etc/ssh/ssh_host_*_key

==> /etc/ssh/ssh_host_ecdsa_key <==

-----BEGIN OPENSSH PRIVATE KEY-----

==> /etc/ssh/ssh_host_ed25519_key <==

-----BEGIN OPENSSH PRIVATE KEY-----

==> /etc/ssh/ssh_host_rsa_key <==

-----BEGIN OPENSSH PRIVATE KEY-----

You had to convert them to the PEM format in place, as follows (DO A BACKUP FIRST!):

ssh-keygen -m PEM -p -f /etc/ssh/ssh_host_ecdsa_key

ssh-keygen -m PEM -p -f /etc/ssh/ssh_host_ed25519_key

ssh-keygen -m PEM -p -f /etc/ssh/ssh_host_rsa_key

The OpenSSH server will happily read PEM format as well, so there should be no problems after that:

# head -1 /etc/ssh/ssh_host_*_key

==> /etc/ssh/ssh_host_ecdsa_key <==

-----BEGIN EC PRIVATE KEY-----

==> /etc/ssh/ssh_host_ed25519_key <==

-----BEGIN OPENSSH PRIVATE KEY-----

==> /etc/ssh/ssh_host_rsa_key <==

-----BEGIN RSA PRIVATE KEY-----
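The same first-line check can be wrapped into a small helper, which is handy for verifying the result before and after the conversion. This is only a sketch: `key_format` is a hypothetical name, and it judges the format purely by the header lines shown above.

```shell
# Print the on-disk format of a private key, judged by its first line only.
# (key_format is a hypothetical helper, not part of OpenSSH or Dropbear.)
key_format() {
    # $1: path to a private key file
    first_line=$(head -n 1 "$1") || return 1
    case "$first_line" in
        "-----BEGIN OPENSSH PRIVATE KEY-----")
            echo "openssh" ;;
        "-----BEGIN RSA PRIVATE KEY-----"|"-----BEGIN EC PRIVATE KEY-----")
            echo "pem" ;;
        *)
            echo "unknown" ;;
    esac
}

# Example (as root): report the format of every host key.
# for key in /etc/ssh/ssh_host_*_key; do
#     printf '%s: %s\n' "$key" "$(key_format "$key")"
# done
```

Note that the Ed25519 key keeps reporting "openssh" after the conversion, matching the output above.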

Convert OpenSSH keys for Dropbear

The dropbear-initramfs package depends on dropbear-bin, which comes with the dropbearconvert tool that we need to convert from the "openssh" to the "dropbear" key format. Old versions had it in /usr/lib/dropbear/dropbearconvert, but newer ones have it in /bin/, so you might have to update the path accordingly:

dropbearconvert openssh dropbear /etc/ssh/ssh_host_ecdsa_key /etc/dropbear-initramfs/dropbear_ecdsa_host_key

dropbearconvert openssh dropbear /etc/ssh/ssh_host_ed25519_key /etc/dropbear-initramfs/dropbear_ed25519_host_key

dropbearconvert openssh dropbear /etc/ssh/ssh_host_rsa_key /etc/dropbear-initramfs/dropbear_rsa_host_key

That's it. Run update-initramfs (/usr/share/initramfs-tools/hooks/dropbear will collect the new host keys into the initramfs) and test after the reboot.
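The three conversions and the path lookup can be folded into one loop. The following is a minimal sketch, not a tested deployment script: `find_dropbearconvert` and `convert_host_keys` are hypothetical helper names, and the optional third argument exists only so that the converter can be swapped out for a dry run.

```shell
# Locate dropbearconvert: PATH first, then the old Debian location.
find_dropbearconvert() {
    if command -v dropbearconvert >/dev/null 2>&1; then
        command -v dropbearconvert
    elif [ -x /usr/lib/dropbear/dropbearconvert ]; then
        echo /usr/lib/dropbear/dropbearconvert
    else
        return 1
    fi
}

# Convert every OpenSSH host key found in $1 into a Dropbear key in $2.
# $3 (optional) overrides the converter command.
convert_host_keys() {
    src_dir=$1
    dst_dir=$2
    converter=${3:-}
    if [ -z "$converter" ]; then
        converter=$(find_dropbearconvert) || {
            echo "dropbearconvert not found" >&2
            return 1
        }
    fi
    for kt in ecdsa ed25519 rsa; do
        src="$src_dir/ssh_host_${kt}_key"
        [ -f "$src" ] || continue    # skip key types that do not exist
        "$converter" openssh dropbear "$src" \
            "$dst_dir/dropbear_${kt}_host_key" || return 1
    done
}

# Example (as root), followed by regenerating the initramfs:
# convert_host_keys /etc/ssh /etc/dropbear-initramfs && update-initramfs -u
```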

Exactly one year ago, after updating a bunch of Debian packages, my laptop stopped booting Linux. Instead, it briefly showed the GRUB banner, then rebooted into the BIOS setup. On every startup. Reproducibly. Last Friday 13th, I was bitten by this bug again, on a machine running Kali Linux, and had to spend an extra hour at work to fix it.

TL;DR: the GRUB config got extended with a call to fwsetup --is-supported. Older GRUB binaries don't know the parameter and will just reboot into the BIOS setup instead. Oops!

The analysis

Of course, I didn't know the root cause yet, and it took me two hours to isolate the problem and some more time to identify the root cause. This post documents the steps of the ~~systematic analysis approach~~ f*cking around and finding out phase, in the hope that it might help future you and me.

Booting my Debian via UEFI or from the SSD's "legacy" boot sector reproducibly crashed into BIOS setup. Upgrading the BIOS didn't improve the situation.

Starting the Debian 12 recovery worked, however.

Manually typing the linux /boot/vmlinux-something root=UUID=long-hex-number and initrd /boot/initrd-same-something and boot commands from the Debian 12 GRUB also brought me back into "my" Linux.

Running update-grub and grub-install from there, in order to fix my GRUB, had no positive effect.

The installed GRUB wasn't displaying anything, so I used the recovery to disable gfx mode in GRUB. It still crashed, but there was a brief flash of some text output. Reading it required a camera, as it disappeared after half a second:

bli.mod not found

A relevant error or a red herring? Googling it didn't yield anything back in 2023, but it was indeed another symptom of the same issue.

Another, probably much more significant finding was that merely loading my installation's grub.cfg from the Debian 12 installer's GRUB also crashed into the BIOS. So there was something wrong with the GRUB config after all.

Countless config changes and reboots later, the problem was bisected to the rather new "UEFI Firmware Settings" menu item. In retrospect, it's quite obvious that the enter setup menu will enter setup, except that... I wasn't selecting it.

But the config file ran fwsetup --is-supported in order to check whether to even display the new menu item. Quite sensible, isn't it?

Manually running fwsetup --is-supported from my installed GRUB or from the Debian installer... crashed into the BIOS setup! The obvious conclusion was that the new feature somehow had a bug or triggered a bug in the laptop's UEFI firmware.

But given that I was pretty late to the GRUB update, and I was running on a quite common Lenovo device, there should have been hundreds of users complaining about their Debian falling apart. And there were none. So it was something unique to my setup after all?

The code change

The "UEFI Firmware Settings" menu used to be unconditional on EFI systems. But then, somebody complained, and a small pre-check was added to grub_cmd_fwsetup() in the efifwsetup module in 2022:

if (argc >= 1 && grub_strcmp(args[0], "--is-supported") == 0)

return !efifwsetup_is_supported ();

If the argument is passed, the module will check for support and return 0 or 1. If it's not passed, the code will fall through to resetting the system into BIOS setup.

No further argument checks exist in the module.

Before this addition, there were no checks for module arguments. None at all.

Calling the previous version of the module with --is-supported wouldn't check for support. It wouldn't abort with an unsupported argument error. It would do what the fwsetup call would do without arguments. It would reboot into the BIOS setup. This is where I opened Debian bug #1058818, deleted the whole /etc/grub.d/30_uefi-firmware file and moved on.
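The mismatch between a new config and an old module can be illustrated with a toy model in shell. Nothing here is GRUB code: `fwsetup_old`, `fwsetup_new`, `probe_menu_entry` and the `FIRMWARE_SUPPORTS_SETUP` switch are made up for illustration, and the "reboot" is simulated by printing a message and leaving the subshell.

```shell
# Toy model only: no real firmware call is made anywhere in this block.
FIRMWARE_SUPPORTS_SETUP=${FIRMWARE_SUPPORTS_SETUP:-yes}   # hypothetical switch

# Simulated reboot: print a message and end the current (sub)shell.
reboot_into_setup() {
    echo "REBOOT INTO FIRMWARE SETUP"
    exit 0
}

# Old module: arguments are never inspected.
fwsetup_old() {
    reboot_into_setup
}

# New module: --is-supported only reports support via the exit status.
fwsetup_new() {
    if [ "$#" -ge 1 ] && [ "$1" = "--is-supported" ]; then
        [ "$FIRMWARE_SUPPORTS_SETUP" = "yes" ]
        return $?
    fi
    reboot_into_setup
}

# What the generated config effectively does, run in a subshell so that
# the simulated reboot cannot terminate the calling shell.
probe_menu_entry() (
    # $1: name of the fwsetup implementation to call
    if "$1" --is-supported; then
        echo "show 'UEFI Firmware Settings' entry"
    fi
)
```

Running `probe_menu_entry fwsetup_new` only decides whether to show the menu entry, while `probe_menu_entry fwsetup_old` never gets that far and "reboots" straight away.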

The root cause

The Debian 12 installer quite obviously had the old version of the module. My laptop, for some weird (specific to me) reason, also had the old module.

The relevant file, /boot/grub/x86_64-efi/efifwsetup.mod is not part of any Debian package, but there exists another copy that's normally distributed as part of the grub-efi-amd64-bin package, and gets installed to /boot/grub/ by grub-install:

grub-efi-amd64-bin: /usr/lib/grub/x86_64-efi/efifwsetup.mod

My laptop had the file, but didn't have this package installed. This was caused by installing Debian, then restoring a full backup from the old laptop, which didn't use EFI yet, over the root filesystem.

The old system had the grub-pc package which satisfies the dependencies but only had the files to install GRUB into the [MBR](https://en.wikipedia.org/wiki/Master_boot_record).

grub-install correctly identified the system as EFI, and copied the stale(!) modules from /usr/lib/grub/x86_64-efi/ to /boot/grub/. This had been working for two years, until Debian integrated the breaking change into the config and into the not installed grub-efi-amd64-bin package, and I upgraded GRUB2 from 2.04-1 to 2.12~rc1-12.

Simply installing grub-efi-amd64-bin properly resolved the issue for me, until one year later.
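In hindsight, a quick check of the module file would have pointed at the stale copy. The sketch below relies on a heuristic that I am assuming rather than quoting from GRUB documentation: a module that understands --is-supported should contain that literal string somewhere in its binary. `fwsetup_mod_status` is a hypothetical helper name.

```shell
# Heuristic: a module built with the 2022 pre-check should embed the
# literal string "--is-supported"; an older one should not.
fwsetup_mod_status() {
    # $1: path to an efifwsetup.mod file
    [ -r "$1" ] || { echo "missing"; return 1; }
    # -a: read binary as text, -F: fixed string, -e: pattern starts with "-"
    if grep -q -a -F -e '--is-supported' "$1"; then
        echo "new"
    else
        echo "old"
    fi
}

# Example: compare the packaged copy with the one GRUB actually loads.
# fwsetup_mod_status /usr/lib/grub/x86_64-efi/efifwsetup.mod
# fwsetup_mod_status /boot/grub/x86_64-efi/efifwsetup.mod
```

Independently of the heuristic, `dpkg -S /usr/lib/grub/x86_64-efi/efifwsetup.mod` shows whether any installed package owns the file. On a healthy system it names grub-efi-amd64-bin; an error means the file is an unowned leftover.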

The Kali machine

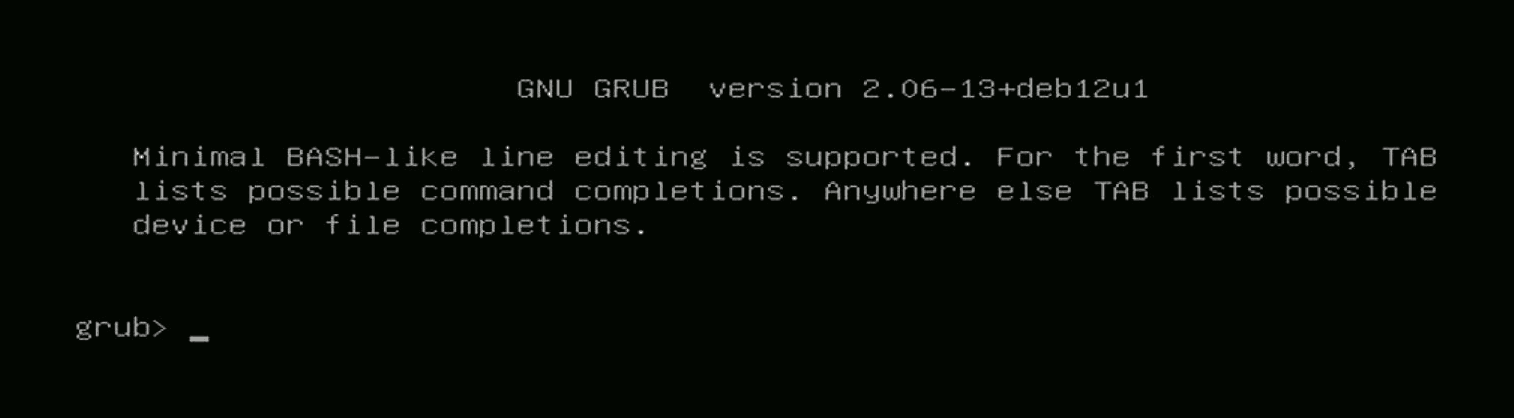

Last Friday (Friday the 13th), I was preparing a headless pentest box for a weekend run on a slow network, and it refused to boot up. After attaching an HDMI-to-USB grabber I was greeted with this unwelcoming screen:

Manually loading the grub.cfg restarted the box into UEFI setup. Now this is something I know from last year! Let's kickstart recovery and check the GRUB2 install:

┌──(root㉿pentest-mobil)-[~]

└─# dpkg -l | grep grub

ii grub-common 2.12-5+kali1 amd64 GRand Unified Bootloader (common files)

ii grub-efi 2.12-5+kali1 amd64 GRand Unified Bootloader, version 2 (dummy package)

ii grub-efi-amd64 2.12-5+kali1 amd64 GRand Unified Bootloader, version 2 (EFI-AMD64 version)

ii grub-efi-amd64-bin 2.12-5+kali1 amd64 GRand Unified Bootloader, version 2 (EFI-AMD64 modules)

ii grub-efi-amd64-unsigned 2.12-5+kali1 amd64 GRand Unified Bootloader, version 2 (EFI-AMD64 images)

ii grub2-common 2.12-5+kali1 amd64 GRand Unified Bootloader (common files for version 2)

┌──(root㉿pentest-mobil)-[~]

└─# grub-install

Installing for x86_64-efi platform.

Installation finished. No error reported.

┌──(root㉿pentest-mobil)-[~]

└─#

That looks like it should be working. Why isn't it?

┌──(root㉿pentest-mobil)-[~]

└─# ls -al /boot/efi/EFI

total 16

drwx------ 4 root root 4096 Dec 13 17:11 .

drwx------ 3 root root 4096 Jan 1 1970 ..

drwx------ 2 root root 4096 Sep 12 2023 debian

drwx------ 2 root root 4096 Nov 4 12:53 kali

Oh no! This also used to be a Debian box before, but the rootfs got properly formatted when moving to Kali. The whole rootfs? Yes! But the EFI files are on a separate partition!

Apparently, the UEFI firmware is still starting the grubx64.efi file from Debian, which comes with a grub.cfg that will bootstrap the config from /boot/ and that... will run fwsetup --is-supported. BOOM!

Renaming the debian folder to something that comes after kali in the alphabet finally allowed me to call it a day.
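Leftover loaders like this one are easy to spot once you know to look for them. A minimal sketch, assuming the EFI system partition is mounted at /boot/efi as on this box; `list_grub_loaders` is a hypothetical helper name.

```shell
# Print every vendor directory below the given EFI directory that
# ships its own grubx64.efi.
list_grub_loaders() (
    efi_dir=${1:-/boot/efi/EFI}
    for dir in "$efi_dir"/*/; do
        [ -f "${dir}grubx64.efi" ] || continue
        basename "$dir"
    done
)

# Example (as root):
# list_grub_loaders
```

More than one line of output on a single-OS machine is a hint that an old installation left its loader behind. `efibootmgr -v` then shows which loader path each firmware boot entry actually points to.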

The conclusion

When adding a feature that is spread over multiple places, it is very important to consider the potential side effects. Not only of what the new feature adds, but also what a partial change can cause. This is especially true for complex software like GRUB2, which comes with different targeted installation pathways and is spread over a bunch of packages.

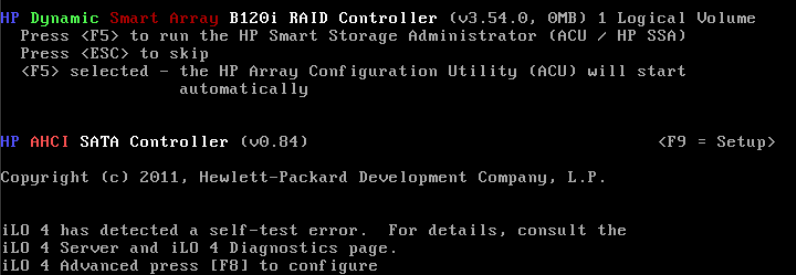

This post describes how to start "Intelligent Provisioning" or the "HP Smart Storage Administrator (ACU / SSA)" on a Gen8 server with a broken NAND, so that you can change the boot disk order. It has been successfully tested on the HPE MicroServer Gen8 as well as on a ProLiant ML310e Gen8, using either a USB drive or a µSD / SD card with at least 1GB of capacity.

Update 2021-05-17: to consistently boot from an SSD in port 5, switch to Legacy SATA mode. See below for details.

Changing the Boot Disk

HP Gen8 servers in AHCI mode will always try to boot from the first disk in the (non-)hot-swap drive bay, and completely ignore the other disks you have attached.

The absolutely non-obvious way to change the boot device, as outlined in a well-hidden comment on the HP forum, is:

- Change the SATA mode from "AHCI" to "RAID" in BIOS

- Ignore the nasty red and orange warning about losing all your data

- Boot into HP "Smart" Storage Administrator

- Create a single logical disk of type RAID0

- Add the desired boot device (and only it!) to the RAID0

- Profit!

The disks in the drive bay will become invisible as boot devices / to your GRUB, but they will keep working as before under your operating system, and there seems to be no negative impact on the boot device either.

This is great advice, provided that you are actually able to boot into SSA (by pressing F5 at the right moment during your bootup process).

WARNING / Update 2020-10-07: apparently, booting from an SSD on the ODD port (SATA port 5) is not supported by HPE, so it is a pure coincidence that it is possible to set up, and your server will eventually forget the RAID configuration of the ODD port, falling back to whatever boot device is in the first non-hot-plug bay. This has happened to me on the ML310e, but not on the MicroServer (as reported in the forum) yet.

Update 2021-05-17: after another reboot-induced RAID config loss, I have done some more research and found this suggestion to switch to Legacy SATA mode. Another source in German. I have followed it:

- Reboot into BIOS Setup (press F9), switch to Legacy SATA

  - System Options

    - SATA Controller Options

      - Embedded SATA Configuration

        - SATA Legacy Support

- Reboot into BIOS Setup (press F9), switch Boot Controller Order

  - Boot Controller Order

    - Ctlr:2

- Optional 😉: shut down the box and swap the cables on ports 5 and 6.

- Profit!

My initial fear that the "Legacy" mode would cause a performance downgrade hasn't materialized so far. The devices are still operated in the fastest SATA mode supported on the respective port, and NCQ seems to work as well.
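The NCQ part can be checked from a running Linux by looking at the queue depth that the kernel uses for each disk. This is a rough sketch: `ncq_status` is a hypothetical helper, the second argument only exists to point it at a fake sysfs tree, and treating "depth above 1" as "NCQ in use" is a rule of thumb for SATA disks.

```shell
# Report the queue depth the kernel uses for a disk.
# Rule of thumb: SATA disks without NCQ run with a depth of 1.
ncq_status() (
    disk=$1                 # e.g. sda
    sysfs=${2:-/sys}        # overridable, mainly for testing
    depth_file="$sysfs/block/$disk/device/queue_depth"
    [ -r "$depth_file" ] || {
        echo "$disk: no queue_depth attribute" >&2
        exit 1
    }
    depth=$(cat "$depth_file")
    if [ "$depth" -gt 1 ]; then
        echo "$disk: NCQ in use (queue depth $depth)"
    else
        echo "$disk: NCQ not in use (queue depth $depth)"
    fi
)

# Example:
# ncq_status sda
```

The negotiated link speed shows up in the kernel log, for example via `dmesg | grep -i 'SATA link up'`.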

The Error Message

However, for some time now, my HP MicroServer Gen8 has been showing one of those nasty NAND / Flash / SD-Card / whatever error messages:

- iLO Self-Test reports a problem with: Embedded Flash/SD-CARD. View details on Diagnostics page.

- Controller firmware revision 2.10.00 Partition Table Read Error: Could not partition embedded media device

- Embedded Flash/SD-CARD: Embedded media initialization failed due to media write-verify test failure.

- Embedded Flash/SD-CARD: Failed restart..

..or a variation thereof. I have ignored it because I thought it referred to the SD card and it didn't impact the server in noticeable ways.

At least not until I wanted to make the shiny new SSD that I bought the default boot device for the server, which is when I realized that neither the F5 key to run HP's "Smart" Storage Administrator tool, nor the F10 key for the "Intelligent" Provisioning tool (do you notice a theme in their naming?) had any effect on the boot process.

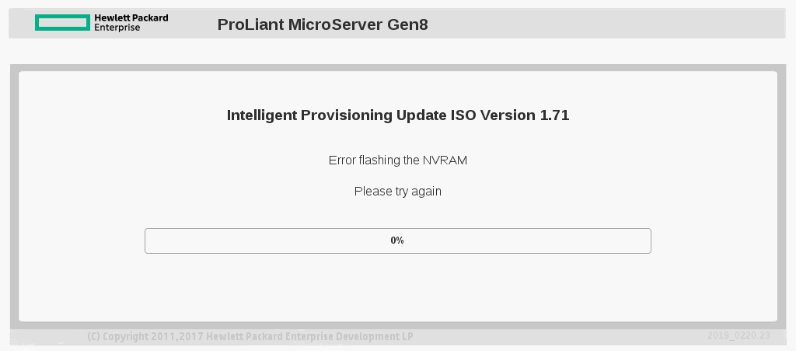

The "Official" Solution

The general advice from the Internet to "fix" this error is to repeat the following steps in random order, multiple times:

- Disconnect mains power for some minutes

- "Format Embedded Flash and reset iLO" from the iLO web interface

- "Reset iLO" from the iLO web interface

- Reset the CMOS settings from the F9 menu

- Reset the iLO settings via mainboard jumpers

- Downgrade iLO to 2.54

- Upgrade iLO to the latest version

- Send a custom XML via HPQLOCFG.exe

And once the error is fixed, to boot the Install Provisioning Recovery Media to put back the right data onto the NAND.

I've tried the various suggestions (except for the iLO downgrade, because the HTML5 console introduced in 2.70 is the only one not requiring arcane legacy browsers), but the error remained.

So I tried to install the provisioning recovery media nevertheless, but it failed with the anticipated "Error flashing the NVRAM":

(it will not boot the ISO if you just dd it to a USB flash drive, but you can put it on a DVD or use the "Virtual Media" gimmick on a licensed iLO)

If none of the above "fixes" work, then your NAND chip is probably faulty indeed and thus the final advice given is:

- Contact HPE for a replacement motherboard

However, my MicroServer is out of warranty and I'm not keen on waiting for weeks or months for replacement and shelling out real money on top.

Booting directly into SSA / IP

But that fancy HPIP171.2019_0220.23.iso we downloaded to repair the NAND surely contains what we need, in some heavily obfuscated form? Let's mount it as a loopback device and find out!

# mount HPIP171.2019_0220.23.iso -o loop /media/cdrom/

# cd /media/cdrom/

# ls -al

total 65

drwxrwxrwx 1 root root 2048 Feb 21 2019 ./

drwxr-xr-x 5 root root 4096 Sep 11 18:41 ../

-rw-rw-rw- 1 root root 34541 Feb 21 2019 back.jpg

drwxrwxrwx 1 root root 2048 Feb 21 2019 boot/

-r--r--r-- 1 root root 2048 Feb 21 2019 boot.catalog

drwxrwxrwx 1 root root 2048 Feb 21 2019 efi/

-rw-rw-rw- 1 root root 2913 Feb 21 2019 font_15.fnt

-rw-rw-rw- 1 root root 3843 Feb 21 2019 font_18.fnt

drwxrwxrwx 1 root root 2048 Feb 21 2019 ip/

drwxrwxrwx 1 root root 2048 Feb 21 2019 pxe/

drwxrwxrwx 1 root root 6144 Feb 21 2019 system/

drwxrwxrwx 1 root root 2048 Feb 21 2019 usb/

# du -sm */

2 boot/

5 efi/

916 ip/

67 pxe/

30 system/

4 usb/

# ls -al ip/

total 937236

drwxrwxrwx 1 root root 2048 Feb 21 2019 ./

drwxrwxrwx 1 root root 2048 Feb 21 2019 ../

-rw-r-xr-x 1 root root 125913644 Feb 21 2019 bigvid.img.gz*

-rw-r-xr-x 1 root root 706750514 Feb 21 2019 gaius.img.gz*

-rw-r-xr-x 1 root root 114 Feb 21 2019 manifest.json*

-rw-rw-rw- 1 root root 140 Feb 21 2019 md5s.txt

-rw-rw-rw- 1 root root 164 Feb 21 2019 sha1sums.txt

-rw-r-xr-x 1 root root 127058868 Feb 21 2019 vid.img.gz*

# zcat ip/gaius.img.gz | file -

/dev/stdin: DOS/MBR boot sector

The ip directory contains the largest payload of that ISO, and all three .img.gz files look like disk images, with exactly 256MB (vid), 512MB (bigvid) and 1024MB (gaius) extracted sizes.
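The extracted sizes can be determined without unpacking the images to disk, by streaming them through a byte counter. A minimal sketch; `gz_size_mib` is a hypothetical helper name and rounds down to whole MiB.

```shell
# Print the uncompressed size of a gzip file in MiB (rounded down).
gz_size_mib() {
    # $1: path to a .gz file
    [ -r "$1" ] || return 1
    bytes=$(gzip -dc "$1" | wc -c)
    echo $(( bytes / 1048576 ))
}

# Example, from the mounted ISO:
# for img in ip/*.img.gz; do
#     printf '%s: %s MiB\n' "$img" "$(gz_size_mib "$img")"
# done
```

This also tells you how large the target USB drive or SD card needs to be for the image you pick.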

Following the "bigger is better" slogan, let's write the biggest one, gaius.img.gz, to a USB flash drive and see what happens!

# # replace /dev/sdc below with your flash drive device!

# zcat ip/gaius.img.gz | dd of=/dev/sdc bs=1M status=progress

... wait a while ...

# reboot
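Since dd will happily overwrite whatever device it is pointed at, a small guard in front of the write step above does not hurt. The sketch below only checks the kernel's "removable" flag, which is an assumption about your hardware: USB flash drives and card readers usually set it, while USB-attached hard disks and SSDs often do not. `is_removable` is a hypothetical helper name.

```shell
# Succeed only if the kernel flags the block device as removable.
is_removable() (
    dev=${1#/dev/}          # accept "sdc" as well as "/dev/sdc"
    sysfs=${2:-/sys}        # overridable, mainly for testing
    [ "$(cat "$sysfs/block/$dev/removable" 2>/dev/null)" = "1" ]
)

# Example: only write the image if /dev/sdc looks like a flash drive.
# is_removable /dev/sdc && zcat ip/gaius.img.gz | dd of=/dev/sdc bs=1M status=progress
```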

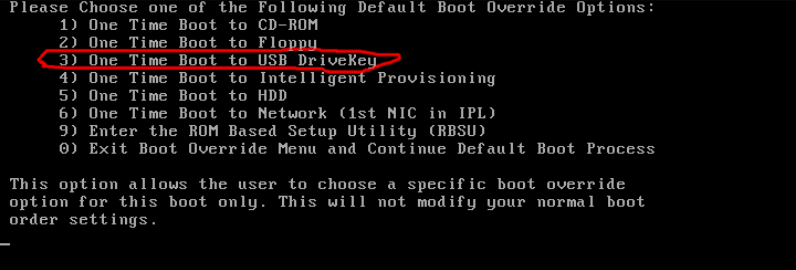

Then, on boot-up, select the "USB DriveKey" option:

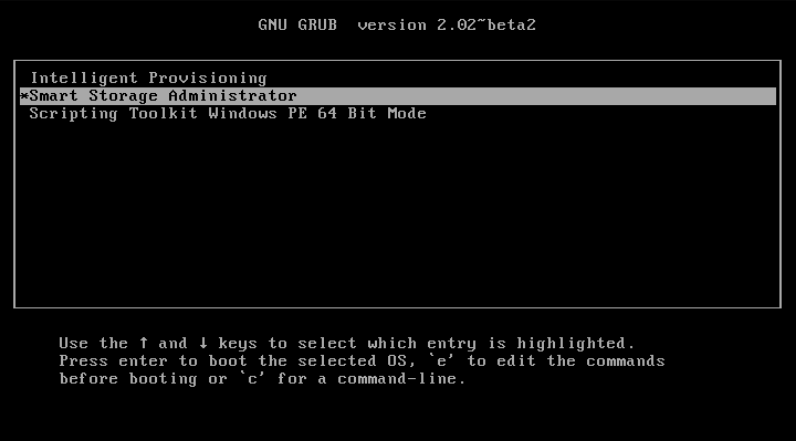

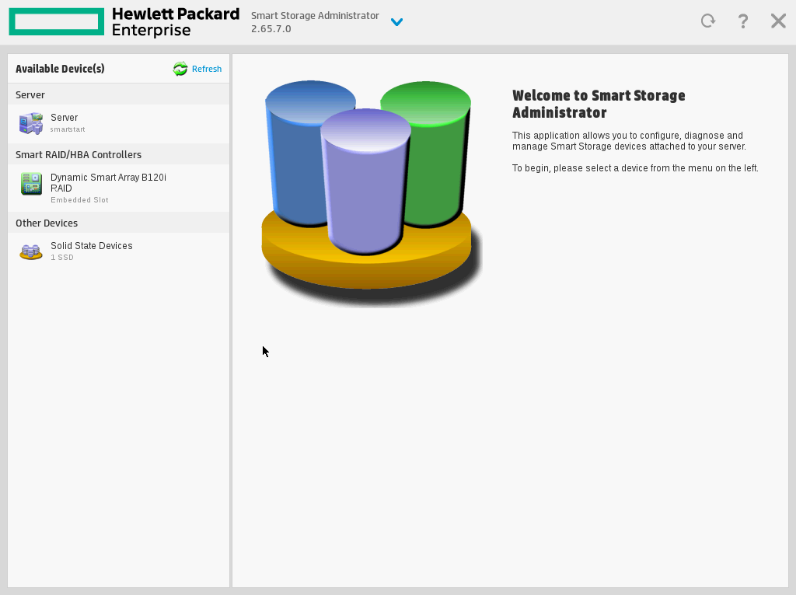

And you will be greeted by a friendly black & white GRUB loader, offering you "Intelligent" Provisioning and "Smart" Storage Administrator, which you can promptly and successfully boot:

From here, you can create a single logical volume of type RAID0, add just your boot disk into it, restart and be happy!