This is a follow-up to the Samsung NX mini (M7MU) firmware reverse-engineering series. This part is about the proprietary LZSS compression used for the code sections in the firmware of Samsung NX mini, NX3000/NX3300 and Galaxy K Zoom. The post is documenting the step-by-step discovery process, in order to show how an unknown compression algorithm can be analyzed. The discovery process was supported by Igor Skochinsky and Tedd Sterr, and by writing the ideas out on encode.su.

The initial goal was to understand just enough of the algorithm to extract and disassemble the ARM code for (de)compression. Unfortunately, this turned out as impossible when, after the algorithm was already mostly demystified, Igor identified it as Fujitsu's RELC (Rapid Embedded Lossless data Compression), an embedded hardware IP block on their ARM SoCs.

The TL;DR results of this research can be found in the project wiki: M7MU Compression.

Layer 1: the .bin file

Part 1 identified the .bin files that

can be analyzed, derived the container format and created an

extraction tool

for the section files within a firmware container.

The analysis in this post is based on the chunk-05.bin section file

extracted from the NX mini firmware version 1.10:

5301868 Apr 8 16:57 chunk-01.bin

1726853 Apr 8 16:57 chunk-02.bin

16 Apr 8 16:57 chunk-03.bin

400660 Apr 8 16:57 chunk-04.bin

4098518 Apr 8 16:57 chunk-05.bin

16 Apr 8 16:57 chunk-06.bin

16 Apr 8 16:57 chunk-07.bin

Layer 2: the sections

The seven section files are between 16 bytes and 5.2MB, the larger ones

definitively contain compressed data (strings output yields incomplete /

split results, especially on longer strings like copyright notices):

<chunk-01.bin>

Copyright (C) ^A^T^@^F, Arcsoft Inc<88>

<chunk-02.bin>

Copyright^@^@ (c) 2000-2010 b^@<95>y FotoNa^QT. ^@<87> ^B's^Qñ^A1erved.

<chunk-05.bin>

Copyright (c) 2<80>^@^@5-2011, Jouni Ma^@^@linen <*@**.**>

^@^@and contributors^@^B^@This program ^@^Kf^@^@ree software. Yo!

u ^@q dis^C4e it^AF/^@<9c>m^D^@odify^@^Q

under theA^@ P+ms of^B^MGNU Gene^A^@ral Pub^@<bc> License^D^E versPy 2.

The wpa_supplicant license inside chunk-05.bin indicates that it's the

network / browser / image upload code, which I need to understand in order to

fix

NX mini support in my Samsung API reimplementation.

Given how compression algorithms replace repeating patterns from the input with references, we can expect the data at the beginning of a compressed file to have fewer references and thus be easier to understand.

Ideally, we need to find some compressed data for which we know the precise uncompressed plain-text, in order to correlate which parts of the stream are literals and which parts are references.

The sections 1, 2, 4 and 5 all contain debug strings close to their respective beginning. This indicates that each section file is compressed individually, an important preliminary insight. However, some of the debug strings have spelling issues, and searching for them on the internet doesn't give us any plain-text reference:

-- Myri^@^@ad --makememlog.^@^@txt is not exist ^@

^@^@^AOpDebugMemIni ^@t ^@+roy alloc OK

^@^H^@´¢éAè@0A--[^CT] H^@^@EAP_ALLOC_TOTAL=^A^@%u

LZSS compression primer

The pattern visible in long strings, where the string is interrupted by two zero bytes after 16 characters, was quickly identified as a variant of LZSS by Igor. I hadn't encountered that before, and still had traumatic memories from variable bit-length Huffman encodings which I deemed impossible to analyze.

Luckily, LZSS operates on whole bytes and is rather straightforward to understand and to implement. The "Sliding window compression" section of Unpacking HP Firmware Updates does a decent job of explaining it on a practical example.

Each block in the compressed stream begins with a bitmask, identifying which of the following bytes are literals that need to be directly inserted into the output stream, and which ones are tokens, representing a lookup into the previously decompressed data. To limit the required memory use, the decompressed data is limited to a fixed size window of some kilobytes. Each token is a tuple of offset (how far back the referenced data is from the end of the window) and length (how many bytes to copy).

The number of bits used to encode the bitmask, the offset, and the length in the token vary with different implementations. More bits for the offset allow for a larger window with more reference data to choose from, more bits for the length allow to copy longer segments with a single token reference.

In the linked example, an 8-bit bitmask is used for each block, and the 16-bit

token is split into 12 bits for the offset, implying a 2^12 = 4096 byte

window, and 4 bits for length, allowing to reference up to 15+3=18 bytes.

As can be seen, the efficiency gains from only using two bytes are limited, so in some LZSS variants, a variable-length encoding is used in the token to allow even longer references.

Layer 4: the blocks

The best way to understand the parameters of the LZSS variant is to compare the compressed stream with the expected plain-text stream byte for byte. For this, we still need a good known plain-text reference. In fact, the garbled wpa_supplicant license that I found back in 2023 is excellent for that, as it's mostly static text from the wpa_supplicant.c source code:

"wpa_supplicant v" VERSION_STR="0.8.x" "\n"

"Copyright (c) 2003-2011, Jouni Malinen <j@w1.fi> and contributors"

"This program is free software. You can distribute it and/or modify it\n"

"under the terms of the GNU General Public License version 2.\n"

"\n"

"Alternatively, this software may be distributed under the terms of the\n"

"BSD license. See README and COPYING for more details.\n"

Looking at the file history in git, there are only two moving parts: the

VERSION_STR that encodes the respective

(pre-)release,

and the second year of the copyright. The NX mini firmware therefore contains

a "0.8.x" version from between

February 2011

and

January 2012.

We should look a few bytes ahead of the actual copyright string

"wpa_suppl..." (offset 0x003d1acb):

003d1ab0: 4749 4e00 6172 4852 334b 5933 0000 2066 GIN.arHR3KY3.. f

003d1ac0: 6f72 2054 6872 6561 6458 0077 7061 0200 or ThreadX.wpa..

003d1ad0: 5f73 7570 706c 48b2 6e74 2076 302e 382e _supplH.nt v0.8.

003d1ae0: 7800 000a 436f 7079 7269 6768 7420 2863 x...Copyright (c

003d1af0: 2920 3280 0000 352d 3230 3131 2c20 4a6f ) 2...5-2011, Jo

003d1b00: 756e 6920 4d61 0000 6c69 6e65 6e20 3c6a uni Ma..linen <j

003d1b10: 4077 312e 6669 3e20 0000 616e 6420 636f @w1.fi> ..and co

003d1b20: 6e74 7269 6275 746f 7273 0002 0054 6869 ntributors...Thi

003d1b30: 7320 7072 6f67 7261 6d20 000b 6600 0072 s program ..f..r

003d1b40: 6565 2073 6f66 7477 6172 652e 2059 6f21 ee software. Yo!

003d1b50: 0a75 2000 7120 6469 7303 3465 2069 7401 .u .q dis.4e it.

003d1b60: 462f 009c 6d04 006f 6469 6679 0011 0a75 F/..m..odify...u

003d1b70: 6e64 6572 2074 6865 4100 2050 2b6d 7320 nder theA. P+ms

003d1b80: 6f66 020d 474e 5520 4765 6e65 0100 7261 of..GNU Gene..ra

003d1b90: 6c20 5075 6200 bc20 4c69 6365 6e73 6504 l Pub.. License.

003d1ba0: 0520 7665 7273 5079 2032 2e0a 0a41 6c00 . versPy 2...Al.

003d1bb0: 366e 5089 0701 7665 6c79 2c00 3a00 8805 6nP...vely,.:...

003d1bc0: 8320 6d61 7920 6265 0781 0120 4664 2007 . may be... Fd .

003d1bd0: 6e0c 0a42 5344 206c 035f 2e20 5300 c730 n..BSD l._. S..0

003d1be0: fb44 2190 4d45 02f4 434f 5059 3013 0a53 .D!.ME..COPY0..S

003d1bf0: 6d6f 0057 6465 7461 044b 696c 732e 0a0f mo.Wdeta.Kils...

...

Assuming that the plain-text bytes in the compressed block are literals and the

other bytes are control bytes, we can do a first attempt at matching and

understanding the blocks and their bitmasks as follows, starting at what looks

like the beginning of a compression block at offset 0x3d1abc:

003d1abc: 00 00 = 0000.0000.0000.0000b // offset: hex and binary bitmask

003d1abe: literal 16 " for ThreadX\0wpa"

In the manually parsed input, "literal N" means there are N bytes matching the known license plain-text, and "insert N" means there are no literals matching the expected plain-text, and we need to insert N bytes from the lookup window.

The first block is straight-forward. 16 zero bits followed by 16 literal bytes

of NUL-terminated ASCII text. We can conclude that the bitmask has 16 bits,

and that a 0 bit stands for "literal".

The second block is slightly more complicated:

003d1ace: 02 00 = 0000.0010.0000.0000b

003d1ad0: literal 6 "_suppl"

003d1ad6: 48 b2 insert 3 "ica" (location yet unknown)

003d1ad8: literal 9 "nt v0.8.x"

The bitmask has six 0 bits, then a single 1 bit at position 7, then nine

more 0s, counting from most to least significant bit. There are two apparent

literal strings of 6 and 9 bytes, with two unknown bytes 0x48 0xb2 between

them that correspond to the three missing letters "ica". We can conclude that

the bitmask is big-endian, processed from MSB to LSB, the 1 in the bitmask

corresponds to the position of the token, and the token is encoded with two

bytes, which is typical for many LZSS variants.

003d1ae1: 00 00 = 0000.0000.0000.0000b

003d1ae3: literal 16 "\nCopyright (c) 2"

Another straight forward all literal block.

003d1af3: 80 00 = 1000.0000.0000.0000b

003d1af5: 00 35 insert 3 "003"

003d1af7: literal 15 "-2011, Jouni Ma"

The above block bitmask has the MSB set, meaning that it's directly followed

by a token. The "5" in the compressed stream ("5-2011") is a red herring and

not actually a part of the year. The copyright string reads "2003-2011" in all

revisions, it never had "2005" as the first year. Therefore, it must be part

of a token (0x00 0x35).

003d1b06: 00 00; literal 16 "linen <j@w1.fi> "

003d1b18: 00 00; literal 16 "and contributors"

Now this is getting boring, right?

003d1b2a: 00 02 = 0000.0000.0000.0010b

003d1b2c: literal 14 "\0 This program "

003d1b3a: 00 0b insert 3 "is "

003d1b3c: literal 1 "f"

Without looking at the token encoding, we now have identified the block structure and the bitmask format with a high confidence.

Decoding just the literals, and replacing tokens with three-byte "*" placeholders, we get the following output:

00000000: 2066 6f72 2054 6872 6561 6458 0077 7061 for ThreadX.wpa

00000010: 5f73 7570 706c **** **6e 7420 7630 2e38 _suppl***nt v0.8

00000020: 2e78 0a43 6f70 7972 6967 6874 2028 6329 .x.Copyright (c)

00000030: 2032 **** **2d 3230 3131 2c20 4a6f 756e 2***-2011, Joun

00000040: 6920 4d61 6c69 6e65 6e20 3c6a 4077 312e i Malinen <j@w1.

00000050: 6669 3e20 616e 6420 636f 6e74 7269 6275 fi> and contribu

00000060: 746f 7273 0054 6869 7320 7072 6f67 7261 tors.This progra

00000070: 6d20 **** **66 7265 6520 736f 6674 7761 m ***free softwa

Layer 5: the tokens

Now we need to understand how the tokens are encoded, in order to implement

the window lookups. The last block is actually a great example to work from:

the token value is 00 0b and we need to insert the 3 characters "is " (the

third character is a whitespace). 0x0b == 11 and the referenced string is

actually contained in the previously inserted literal, beginning 11 characters

from the end:

003d1b2c: literal 14 "\0 This program "

☝️☝️8←6←4←2←0

003d1b3a: 00 0b insert 3 "is " (offset -11)

003d1b3c: literal 1 "f"

Two-byte tokens are typical for many LZSS variants. To be more efficient than literals, a token must represent at least three bytes of data. Therefore, the minimum reference length is 3, allowing the compressor to subtract 3 when encoding the length value - and requiring to add 3 when decompressing.

From the above token, we can conclude that the offset is probably stored in

the second byte. The minimum reference length is 3, which is encoded as 0x0,

so we need to look at a different example to learn more about the length

encoding. All the tokens we decoded so far had a length of 3, so we need to

move forward to the next two blocks:

003d1b3d: 00 00 = 0000.0000.0000.0000b

003d1b3f: literal 16 "ree software. Yo"

003d1b4f: 21 0a = 0010.0001.0000.1010b

003d1b51: literal 2 "u "

003d1b53: 00 71 insert 3 "can"

003d1b55: literal 4 " dis"

003d1b59: 03 34 insert 6 "tribut" (offset -52?)

003d1b5b: literal 4 "e it"

003d1b5f: 01 46 insert 4 " and" (offset -70?)

003d1b61: literal 1 "/"

003d1b62: 00 9c insert 3 "or "

003d1b64: literal 1 "m"

The last block gives us two tokens with lengths of 6 (to be encoded as 0x3)

and 4 (0x1). These values match the first byte of the respective

token. However, using the whole byte for the length would limit the window

size to meager 256 bytes, an improbable trade-off. We should look for a token

with a known short length and as many bits in the first byte set as possible.

We had such a token in the beginning actually:

003d1ad6: 48 b2 insert 3 "ica" (location yet unknown)

We already know that the length is encoded in the lower bits of the first

byte, with length=3 encoded as 0x0. In 0x48 = 0100.1000b, we only get

three zero bits at the bottom of the byte, limiting the length to 7+3 = 10,

which is another improbable trade-off.

That also implies that the upper five bits, together with the second byte,

form a 13-bit offset into a 2^13=8192 byte window. By removing the length

bits from the first byte, 0x48b2 becomes the offset 0x09b2 = 2482.

hi, lo = f.read(2)

count = 3 + (hi & 0x07)

offset = ((hi >> 3) << 8) + lo

We apply the window lookup algorithm to our compressed stream, and arrive at the following uncompressed plain-text:

00000000: 2066 6f72 2054 6872 6561 6458 0077 7061 for ThreadX.wpa

00000010: 5f73 7570 706c **** **6e 7420 7630 2e38 _suppl***nt v0.8

00000020: 2e78 0a43 6f70 7972 6967 6874 2028 6329 .x.Copyright (c)

00000030: 2032 **** **2d 3230 3131 2c20 4a6f 756e 2***-2011, Joun

00000040: 6920 4d61 6c69 6e65 6e20 3c6a 4077 312e i Malinen <j@w1.

00000050: 6669 3e20 616e 6420 636f 6e74 7269 6275 fi> and contribu

00000060: 746f 7273 0054 6869 7320 7072 6f67 7261 tors.This progra

00000070: 6d20 6973 2066 7265 6520 736f 6674 7761 m is free softwa

00000080: 7265 2e20 596f 7520 **** 6e20 6469 7374 re. You **n dist

00000090: 7269 6275 7465 2069 7420 616e 642f 6f72 ribute it and/or

000000a0: 206d 6f64 6966 7920 6974 0a75 6e64 6572 modify it.under

000000b0: 2074 6865 20** **** 6d73 206f 6620 7468 the ***ms of th

000000c0: 6520 474e 5520 4765 6e65 7261 6c20 5075 e GNU General Pu

000000d0: 626c **** 204c 6963 656e 7365 2076 6572 bl** License ver

000000e0: 73** **** 2032 2e0a 0a41 6c** **** 6e** s*** 2...Al***n.

000000f0: **** 7665 6c79 2c20 7468 6973 2073 6f66 **vely, this sof

00000100: 7477 6172 6520 6d61 7920 6265 2064 6973 tware may be dis

00000110: 7472 6962 7574 4664 2007 6e0c 0a** **** tributFd .n..***

00000120: **** 4420 **** **** **** **5f 2e20 5300 **D *******_. S.

As we started the decompression in the middle of nowhere, the window isn't properly populated, and thus there are still streaks of "*" for missing data.

However, there is also a mismatch between the decompressed and the expected plain-text in the last two lines, which cannot be explained by missing data in the window:

00000110: 7472 6962 7574 4664 2007 6e0c 0a** **** tributFd .n..***

00000120: **** 4420 **** **** **** **5f 2e20 5300 **D *******_. S.

What is happening there? We need to manually look at the last two blocks to see what goes wrong:

003d1bb4: 07 01 = 0000'0111'0000'0001b

003d1bb6: literal 5 "vely,"

003d1bbb: 00 3a insert 3 " th" (offset -58)

003d1bbd: 00 88 insert 3 "is " (offset -136)

003d1bbf: 05 83 insert 8 "software" (offset -131)

003d1bc1: literal 7 " may be"

003d1bc8: 07 81 insert 10 " distribut" (offset -129)

003d1bca: 01 20 = 0000'0001'0010'0000b

003d1bcc: literal 7 "Fd \0x07n\x0c\n"

Quite obviously, the referenced "distribut" at 0x3d1bc8 is correct, but after

it comes garbage. Incidentally, this token is the first instance where the

encoded length is 0x7, the maximum value to fit into our three bits.

Variable length tokens

The lookup window at offset -129 contains "distribute it", and the plain-text that we need to emit is "distributed".

We could insert "distribute" (11 characters instead of 10) from the window,

and we can see a literal "d" in the compressed data at 0x3d1bcd that would

complement "distribute" to get the expected output. Between the token and the

literal we have three bytes: 0x01 0x20 0x46.

What if the first of them is actually a variable-length extension of the token? The maximum lookup of 10 characters noted earlier is not very efficient, but it doesn't make sense to make all tokens longer. Using a variable-length encoding for the lookup length makes sense (the window size is fixed and only ever needs 13 bits).

Given that we need to get from 10 to 11, and the next input byte is 0x01,

let's assume that we can simply add it to the count:

hi, lo = f.read(2)

count = 3 + (hi & 0x07)

offset = ((hi >> 3) << 8) + lo

if count == 10:

# read variable-length count byte

count += f.read(1)[0]

With the change applied, our decoding changes as follows:

...

003d1bc8: 07 81 01 insert 11 " distribut" (offset -129)

003d1bcb: 20 46 = 0010'0000'0100'0110b

003d1bcd: literal 2 "d "

003d1bcf: 07 6e 0c insert 22 "under the terms of the" (offset -110)

003d1bd2: literal 6 "\nBSD l"

003d1bd8: 03 4f insert 6 "icense" (offset -95)

003d1bda: literal 3 ". S"

003d1bdd: 00 c7 insert "ee " (offset -199)

003d1bdf: 30 fb insert "***" (offset -1787)

003d1be1: literal 1 "D"

This actually looks pretty good! We have another three-byte token in the next

block at 0x3d1bcf, with a lookup length of 22 bytes (3 + 0x7 + 0x0c) that

confirms our assumption. The uncompressed output got re-synchronized as well:

00000110: 7472 6962 7574 6564 2075 6e64 6572 2074 tributed under t

00000120: 6865 20** **** 6d73 206f 6620 7468 650a he ***ms of the.

00000130: 4253 4420 6c69 6365 6e73 652e 2053 6565 BSD license. See

00000140: 20** **** 444d 4520 616e 6420 434f 5059 ***DME and COPY

How long is the length? YES!

With the above, the common case is covered. Longer lengths (>265) are rare in the input and hard to find in a hex editor. Now it makes sense to instrument the tooling to deliberately explode on yet unidentified corner cases.

There exist different variable-length encodings. The most-widely-known one is probably UTF-8, but it's rather complex and not well-suited for numbers. The continuation bit encoding in MIDI is a straight-forward and sufficiently efficient one. The trivially blunt approach would be to keep adding bytes until we encounter one that's less than 255.

In the case of MIDI, we need to check for cases of count > 138, in the

latter for count > 265.

if count == 10:

# read variable-length count byte

count += f.read(1)[0]

if count > (10 + 128):

print(f"{f.tell():08x}: BOOM! count > 138!")

count = count - 128 + f.read(1)[0]

Running the code gives us a canary at an offset to manually inspect:

003d2e53: BOOM! count > 138!

003d2e51: ef 18 c0 insert 172 "d\x00ca_cert\x00ca_path\x00cut nt_cert\x00 d\x01"

"vlai_key_us_\x01dd\x00dh_f\x01\x01\x01\x01subjec"

"t_ollch\x00altsubject_ollch\x00ty\x00pass2word\x"

"00ca_2cert\x00ca_path2\x00 d\x01vlai_keyrt\x00 d"

"\x01vlai_key_2us_\x01dd\x00dh_f\x01\x01\x012\x01"

"subject_ol" (offset -7448)

Now this does not look like a very nice place to manually check whether the data is valid. Therefore, it makes sense to run different variants of the decoder on the complete input and to compare and sanity-check the results.

Keeping assertion checks of the assumed stream format allows to play around with different variants, to see how far the decoder can get and to find incorrect reverse-engineering assumptions. This is where the FAFO method should be applied extensively.

Applying the FAFO method (after figuring out the subsection format outlined later in this post) led to the realization that the variable-length length encoding is actually using the brute-force addition approach:

hi, lo = f.read(2)

count = 3 + (hi & 0x07)

offset = ((hi >> 3) << 8) + lo

if count == 10:

more_count = 255

while more_count == 255:

more_count = f.read(1)[0]

count += more_count

Well, surely this can't be too bad, how long is the longest streak of repeating data in the worst case?

Well. chunk-01.bin wins the length contest:

0046c204: literal b'\x00'

0046c205: token 0x0701ffffffffffffffffffffffffffffffffff56: 4431 at -1

<snip>

0046c21d: bitmask 0xc0000

0046c21f: token 0x0701ffffffffffffffffffffffffffffffffffffffffffffffffff \

ffffffffffffffffffffffffffffffffffffffffffffffffffffff \

ffffffffffffffffffffffffffffffffffffffffffffffffffffff \

ffffffffffffffffffffffffffffffffff56: 24576 at -1

0046c282: token 0x0000: 3 at -0

Oof! Samsung is using 97 length extension bytes to encode a lookup length of 24576 bytes. This is still a compression ratio of ~250x, so there is probably no need to worry.

By the way, offset=-1 essentially means "repeat the last byte from the

window this many times". And offset=0 (or rather token=0x0000) is some

special case that we have not encountered yet.

Back to layer 3: the subsections

So far, we started decoding at a known block start position close to the identified plain-text. Now it's time to try decompressing the whole file.

When applying the decompression code right at the beginning of one of the compressed files, it falls apart pretty quickly (triggering an "accessing beyond the populated window" assertion):

$ m7mu-decompress.py -d -O0 chunk-01.bin

00000000 (0): bitmask 0x623f=0110'0010'0011'1111b

literal b'\x00'

token 0x0070=0000'0000'0111'0000b: 3 at -112

IndexError: 00000000: trying to look back 112 bytes in a 1-sized window

$ m7mu-decompress.py -d -O1 chunk-01.bin

00000001 (0): bitmask 0x3f00=0011'1111'0000'0000b

literal b'\x00p'

token 0x477047=0100'0111'0111'0000'0100'0111b: 81 at -2160

IndexError: 00000001: trying to look back 2160 bytes in a 2-sized window

$ m7mu-decompress.py -d -O2 chunk-01.bin

00000002 (0): bitmask 0x0000=0000'0000'0000'0000b

literal b'pGpG\x01F\x81\xea\x81\x00pG8\xb5\xfaH'

00000014 (1): bitmask 0x0000=0000'0000'0000'0000b

literal b'\x00%iF\x05`\xf9H@h\xda\xf5|\xd9\x10\xb1'

00000026 (2): bitmask 0x0000=0000'0000'0000'0000b

literal b'\xf7\xa0\xe7\xf5\x11\xdc\x00\x98\xb0\xb1\xf4L\x0c4%`'

<snip very long output>

This leads to the assumption that the first two bytes, 0x62 0x3f for

chunk-05.bin, are actually not valid compression data, but a header or count

or size of some sort.

Given that it's a 16-bit value, it can't be the size of the whole file, but could indicate the number of compression blocks, the size of the compressed data to read or the size of the uncompressed data to write.

These hypotheses were evaluated one by one, and led to the discovery of an

anomaly: the compressed file had 0x00 0x00 at offset 0x623f (at the same

time identifying the number as big-endian):

╭──

00006230: 07 30 0907 cc13 6465 6c65 0760 2204 30│00 .0....dele.`".0.

──╮ ╰──

00006240: 00│48 2589 c407 6025 416c 6c06 1c6c 73 07 .H%...`%All..ls.

──╯

This number is followed by 0x4825, and there is another pair of zeroes at

0x623f + 2 + 0x4825 = 0xaa66:

╭────╮

0000aa60: 2b60 15fe 0114│0000│4199 4010 6500 1353 +`......A.@.e..S

╰────╯

Apparently the 0x0000 identifies the end of a subsection, and the following

word is the beginning of the next section, indicating its respective length in

bytes.

I initially assumed that 0x0000 is a special marker between subsections,

and I needed to manually count bytes and abort the decompression before

reaching it, but it turns out that it actually is just the final 0x0000

token that tells the decompressor to finish.

Subsection sizes after decompression

The subsection sizes appear to be rather random, but Igor pointed out a pattern in the decompressed sizes, at a time when the file didn't decompress cleanly yet, and we were struggling for new ideas:

BTW, it seems the first compressed section decompresses to exactly 0x8000 bytes

That made me check out the decompressed sizes for all subsections of

chunk-05 and to find a deeper meaning:

*** wrote 32768 bytes

*** wrote 24576 bytes

*** wrote 24576 bytes

...

*** wrote 24576 bytes

*** wrote 24462 bytes 👈🤯

*** wrote 24576 bytes

...

The first one was clearly special, most of the following subsections were 24576 bytes, and some were slightly different. A deeper look into the 24462-byte-section led to the realization that the previous approach at counting variable-length lengths was not correct, and that it is indeed "add bytes until !=255".

Plain-text subsections

That made all the subsections until offset 0x316152 decompress cleanly,

giving nice equally-sized 24576-byte outputs.

However, the subsection size at offset 0x316152 was 0xe000, a rather

strange value. I had a suspicion that the MSB in the subsection size was

actually a compression flag, and 0xe000 & 0x7fff = 24576 - the typical

uncompressed subsection size!

The suspicion came from looking at chunk-03.bin, which begins with a

subsection of 0x800c, followed by 14 bytes, of which most are zero:

00000000: 800c 7047 0000 0300 0000 0000 0000 0000 ..pG............

That could mean "uncompressed subsection", size 0x0c = 12, followed by

an 0x0000 end-of-file marker.

By applying FAFO it was determined that the uncompressed subsections also need to be fed into the lookup window, and the decoder behaved excellently:

$ m7mu-decompress.py -d -O0 chunk-01.bin |grep "\* wrote " |uniq -c

1 *** wrote 32768 bytes

253 *** wrote 24576 bytes

1 *** wrote 17340 bytes

This allowed to decompress the firmware for the NX cameras, but there is still

one failure case with chunk-04.log of the RS_M7MU.bin file. The decoder

bails out after writing:

*** 00000000 Copying uncompressed section of 0 bytes ***

That's not quite right. The first subsection begins with 0x8000 which,

according to our understanding, should either mean "0 uncompressed bytes" or

"32768 compressed bytes". Neither feels quite right. Given that the

(compressed) first subsection in all other files decompresses to 32768 bytes,

this is probably a special case of "32768 uncompressed bytes", which needs to

be added to the decoder:

subsection_len = struct.unpack(">H", f.read(2))[0]

if subsection_len == 0x0000:

return # reached end-of-section marker

elif subsection_len >= 0x8000:

# uncompressed subsection

uncompressed_copy_len = (subsection_len & 0x7fff)

if subsection_len == 0x8000:

# work around for RS_M7MU chunk-04

uncompressed_copy_len = 0x8000

This was the last missing piece to decompress all firmware files! Having those, Igor identified the compression algorithm as the very obscure Fujitsu RELC.

Fujitsu RELC history

There are still a few details available about Fujitsu RELC, mostly in Japanese. There is a 2014 Arcmanager software library pamphlet, mentioning both ESLC (Embedded Super Lossless Compression) and RELC (Rapid Embedded Lossless data Compression):

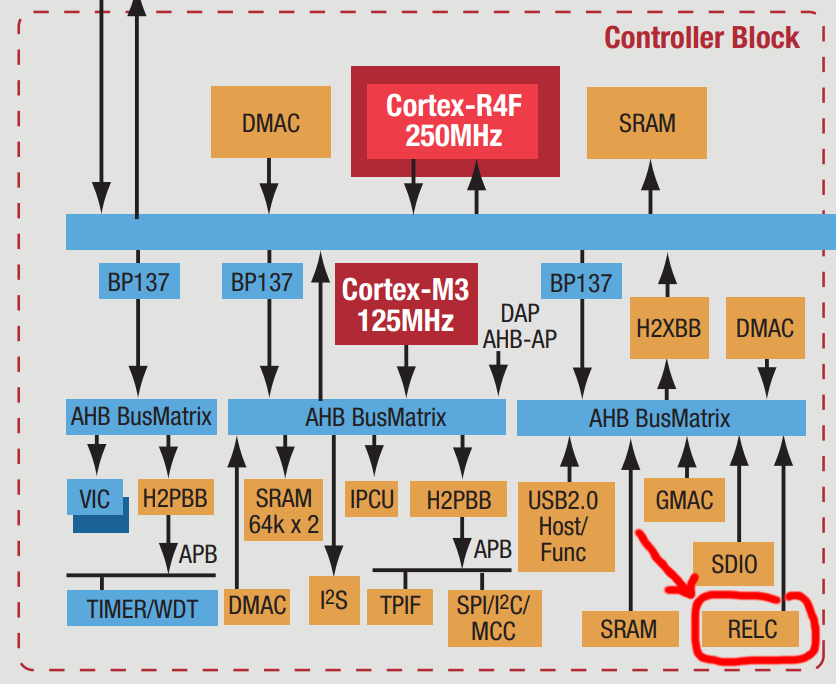

Furthermore, their 2011 ARM Cortex Design Support factsheet outlines a RELC hardware block connected via AHB:

The Arcmanager portal gives some more insights:

- the (Windows) Arcmanager was introduced in 2002 and last updated in 2022.

- there is a sample self-extracting EXE archive with some example Visual Basic / Visual C code

- the algorithm was first published by Noriko Itani and Shigeru Yoshida in an October 2004 programming magazine as SLC/ELC

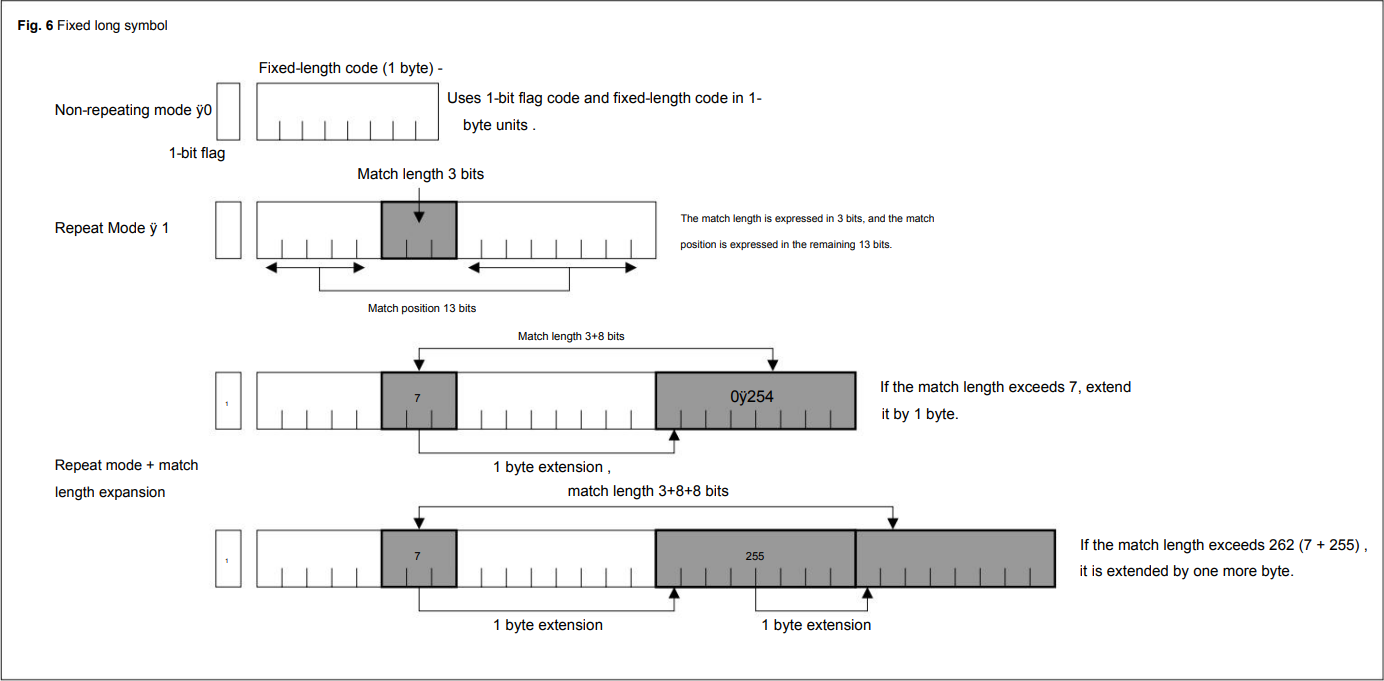

The original submission already makes use of 13-bit offsets and 3+N*8 bit offset encodings:

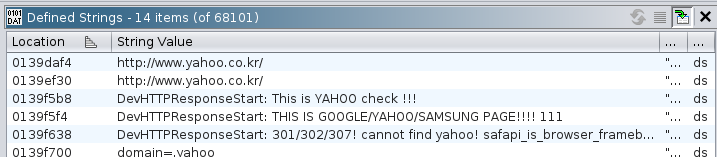

Unfortunately, this knowledge was only unlocked after understanding the algorithm solely by analyzing the Samsung firmware files. However, the use of a RELC hardware block can be confirmed from the RELC-related strings in the firmware files:

# Set RELC AHB Bus Clock Stop in CLKSTOP_B register

USAGE>top clock set_reg clkstop_b RELCAH value

USAGE>relc set [inbuf/inbufm/refbuf] [in_addr] [in_size] [out_addr

# Set and Start RELC normal mode.(Async type)

# Set and Start RELC descriptor mode.(Async type)

# Set and Start RELC normal mode.(Sync type)

# Set and Start RELC descriptor mode.(Sync type)

Summary

This rather long and winding story goes through different layers of the NX mini, NX3000/NX3300 and Galaxy K-Zoom firmware firmware files, analyzing and understanding each layer, and arriving at the discovery of a hardware accelerator embedded into the Fujitsu CPUs powering the Samsung cameras.

The ultimate file structure is as follows:

- a 1024-byte M7MU header pointing at the following sections:

- a "writer" (320KB ARM binary, probably responsible for flashing the firmware from SD)

- seven (un)compressed section files (

chunk-0x.bin), each with the following format:- a sequence of subsections, each starting with a compression flag bit and a 15-bit length, with the compressed subsections containing an RELC/LZSS compressed stream with two-byte bitmasks and two-byte/variable-length tokens, making use of an 8KB window

- a

0x0000end-of-section marker

- one rather large

SF_RESOURCEfilesystem with additional content

The firmware file dumper to extract the writer, the chunks and the

SF_RESOURCE was described in the

M7MU Firmware File Format

post and can be downloaded from

m7mu.py.

The final version of the decompressor, implementing all of the above discoveries, can be found in the project repository as m7mu-decompress.py.

Now this can be used for understanding the actual code running on these cameras. Stay tuned for future posts!

This post documents how to convert a directory full of project release ZIP files into a git history with better diffs and commit dates. It was an itch I needed to scratch and there is no reason to keep the hacky tooling to myself.

The NX-KS project

I'm an active user of the NX-KS2 mod on my 2014 Samsung NX500 camera. The mod implements additional functionality and offers an UI overlay to access it. Under the hood, it's shell scripts and small UI helpers. The original developer KinoSeed vanished around 2017, and all official download locations are gone.

I've been carrying a few minor modifications in my $HOME and on my cameras

since installing the mod, and I always wanted to properly track them in git.

However, there was no original repository, and just using the last release

2.88 as the initial commit felt wrong to me.

Luckily, somebody

collected the known releases and

even added a

README.html with a change log of sorts.

I want to convert that set of files into a git history now.

Preparing the inputs

I've downloaded all the ZIP files from NX-KS-mod/ and NX-KS-mod/archive/

into one common directory. To make the file names consistent, I had to rename

the v2 files:

rename s/NX-KS2-/NX-KS-2./ nx-ks-mod-input/NX-KS2*

The change log in README.html contains the commit id of the ZIP file in

the upstream project (yes, don't ask me!), the version string, a changelog,

and a date.

I manually converted the README into tab-separated values as follows:

$version |

$changes |

$date |

|---|---|---|

| 2.88 | TODO | 2017-02-02 |

| 2.07 | TODO | 2016-05-24 |

| 2.00m-repack | prefman saves to eMMC only | 2016-05-16 |

| 1.82 | Zoomzoom | 2016-05-27 |

| 1.81 | Ramping and Wake-lapse NX1 compatible | 2016-05-22 |

| 1.80 | better balanced priority, tbbaf removed | 2016-05-22 |

| ... | ... | ... |

The full metadata table was saved to nx-ks-mod-changes.tsv.

The changes in 2.88 and 2.07 were undocumented, so I left them as "TODO" for

the first iteration, checked the git diff after it, and improved the

changelog on the second conversion re-run.

The 2.00m-repack version was based on the ZIP file name, I decided to keep

it as-is in the commit history, but to manually change the git tag to

v2.00m.

Converting the README

The project is providing a NX-KS_readme.odt (renamed to NX-KS2_readme.odt

in the 2.x versions), which contains a description and sometimes can indicate

changes. However, ODT is not a good format to git-diff (even though

there are workarounds).

As a hack, I'm using Pandoc to create a markdown

approximation of the original document:

[ -f "NX-KS_readme.odt" ] && pandoc NX-KS_readme.odt -o NX-KS_readme.md

[ -f "NX-KS2_readme.odt" ] && pandoc NX-KS2_readme.odt -o NX-KS_readme.md

Unfortunately, the markup in the ODT is not using proper styles, so the result is far from perfect.

Creating the repository

We need to create an empty git repository first:

mkdir nx-ks-mod

cd nx-ks-mod

git init .

Committing the ZIP files

Then, for each ZIP file we have to perform the following steps:

Remove all previous content (in case a file was deleted):

git rm -r .Unpack the ZIP file (we are using find because we do not have a full file-name in our table):

fn=$(find ../nx-ks-mod-input/ -name *$version.zip) unzip "$fn"Pandoc the README (see above)

Commit everything, back-dating to

$dateand cheating the file name into the committer id (it could be added into the commit message as well), then tagging it with the version from the table:git add . git commit --date="$date 00:00:00" \ --author "$(basename $fn) <$email>" \ -m "$changes" git tag "v$version"

The final script

There is one more thing. The order in the README was newest-first, but for a proper commit history, we need to reverse the order, which we do using tac.

The complete result is this:

#!/bin/bash

mkdir nx-ks-mod && (cd nx-ks-mod ; git init .)

tac nx-ks-mod-changes.tsv | \

while IFS=$'\t' read version changes date ; do

echo "-- $date -- $version -- $changes --"

echo ""

fn=$(find nx-ks-mod-input/ -name *$version.zip)

cd nx-ks-mod

git rm -r .

unzip "../$fn"

[ -f "NX-KS_readme.odt" ] && pandoc NX-KS_readme.odt -o NX-KS_readme.md

[ -f "NX-KS2_readme.odt" ] && pandoc NX-KS2_readme.odt -o NX-KS_readme.md

git add .

git commit --date="$date 00:00:00" --author "$(basename $fn) <georg@op-co.de>" -m "$changes"

git tag "v$version"

cd ..

echo

done

Not perfect, but good enough to create a commit history, as can be seen on github/nx-ks-mod.

In 2014 and 2015, Samsung released the NX mini and NX3000/NX3300 cameras as part of their mirrorless camera line-up. My 2023 archaeological expedition showed that they use the Fujitsu M7MU SoC, which also powers the camera in the dual-SoC Exynos+M7MU Galaxy K-Zoom. This blog post performs a detailed step-by-step reverse engineering of the firmware file format. It is followed by reverse-engineering the LZSS compression, in order to obtain the raw firmware image for actual code analysis.

The TL;DR results of this research can be found in the project wiki: M7MU Firmware Format / SF_RESOURCE.

Prelude

Two years ago I did a heritage analysis of all NX models and found some details about the history of the Milbeaut MB86S22A SoC powering the above models. The few known details can be read up in that post.

Copyright (c) 2<80>^@^@5-2011, Jouni Ma^@^@linen <*@**.**>

^@^@and contributors^@^B^@This program ^@^Kf^@^@ree software. Yo!

u ^@q dis^C4e it^AF/^@<9c>m^D^@odify^@^Q

under theA^@ P+ms of^B^MGNU Gene^A^@ral Pub^@<bc> License^D^E versPy 2.

The firmware files are using some sort of compression that neither I nor

binwalk knew about, so the further analysis was stalled. Until April 2025.

Nina wrote a

fascinating thread about the TRON operating system,

I chimed in with a

shameless plug of my own niche knowledge of µITRON on Samsung cameras,

and got Igor Skochinsky nerd-sniped.

Igor quickly realized it is a variant of

LZSS,

similar to a

reverse-engineered HP firmware.

Together, we went on a three-week journey of puzzles within puzzles. This post is the cleaned up documentation of the first part of that treasure hunt, hoping to inspire and guide other reverse engineers.

Collecting .bin files

To analyze the format it's helpful to obtain as many diverse specimens as

possible. Samsung still offers the latest camera firmware versions:

NX mini 1.10,

NX3000 1.11,

NX3300 1.01.

Older versions can be obtained from the NX Files

archive. The Galaxy K Zoom firmware can be downloaded

from portals like SamFw. The interesting

part is stored in the sparse

ext4 root filesystem as /vendor/firmware/RS_M7MU.bin. With only 6.2MB it's

the smallest specimen, the dedicated camera files are over 100MB each.

The details of the header format were "discovered" back in 2023 by doing a github search for "M7MU", and finding an Exynos interface driver. The driver documents the header format that matches all known specimens.

The header has three interesting parts for further analysis (the values and

hex-dumps in this blog post are all taken from DATANXmini.bin version 1.10;

the header values are little-endian):

- the "writer" (

writer_load_size = 0x4fc00andwrite_code_entry 0x40000400) - the "code" (

code_size = 0xafee12andoffset_code = 0x50000) - the "sections"

The section_info field is an array of 25 integers, the first one looking

like a count, and the following ones like tuples of [number, size] (we can

rule out [number, offset] because the second column is not growing linearly):

section_info = 00000007

00000001 0050e66c

00000002 001a5985

00000003 00000010

00000004 00061d14

00000005 003e89d6

00000006 00000010

00000007 00000010

10x 00000000

Adding up the sizes of all sections gives us 0x958d86 or roughly 9.3MB.

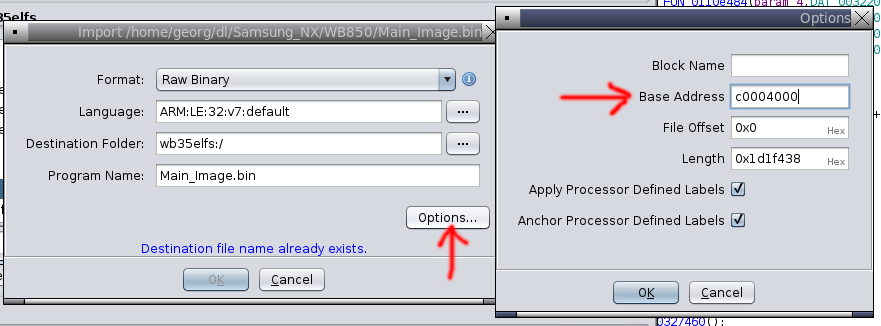

The writer

The writer is an uncompressed 320KB ARM binary module. The load address of

0x40000400 and the header size of 1024 = 0x400 imply that the loader starts

right after the header. A brief analysis indicates code to access a exFAT, FAT

and SDIO. This seems to be the module that does a full copy of the firmware

image from an SD card to internal flash, but without actually uncompressing it.

The writer also seems to end before 0x50000 = 0x400 + 0x4fc00, padded with

47KB of zero bytes:

00044270: 04f0 1fe5 280a 0040 0000 0000 0000 0000 ....(..@........

00044280: 0000 0000 0000 0000 0000 0000 0000 0000 ................

*

00050000: 5a7d 0000 f801 9fe5 0010 90e5 c010 81e3 Z}..............

00050010: 0010 80e5 5004 ec04 1040 0410 100f 11ee ....P....@......

The code

The above hex-dump also shows that something new begins at 0x50000, matching

the offset_code header value. Assuming that it's the code block and that it's

~11MB (code_size = 0xafee12) we can check for its end as well, at 0xb4ee12:

00b4ec10: 0000 0000 0000 0000 0000 0000 0000 0000 ................

*

00b4ee00: 800c 0100 0000 0200 0000 0300 0000 0000 ................

╭────────────────────────────────────────────────────

00b4ee10: ed08│0000 0000 0000 0000 0000 0000 0000 ................

──────────────╯

00b4ee20: 0000 0000 0000 0000 0000 0000 0000 0000 ................

*

00b4f000: 5346 5f52 4553 4f55 5243 4500 0000 0000 SF_RESOURCE.....

This is also a match, there is a bunch of zero-padding within the code block,

and it ends with 0xed 0x08, followed by some more zero-padding after the

code block.

Surprise SF_RESOURCE chunk

The just discovered block at 0xb4f000 looks like some sort of resource

section. Again, it's not directly known to binwalk (but binwalk finds a

number of known signatures within!). Let's investigate how it continues:

00b4f000: 5346 5f52 4553 4f55 5243 4500 0000 0000 SF_RESOURCE.....

00b4f010: 3031 2e30 a300 0000 0000 0000 0000 0000 01.0............

00b4f020: 4e58 4d49 4e49 2e48 4558 0000 0000 0000 NXMINI.HEX......

00b4f030: 0000 0000 0000 0000 0000 0000 0000 0000 ................

00b4f040: 0000 0000 0000 0000 0000 0000 0000 0000 ................

00b4f050: 0000 0000 0000 0000 0000 0000 0c92 0000 ................

00b4f060: 6364 2e69 736f 0000 0000 0000 0000 0000 cd.iso..........

00b4f070: 0000 0000 0000 0000 0000 0000 0000 0000 ................

00b4f080: 0000 0000 0000 0000 0000 0000 0000 0000 ................

00b4f090: 0000 0000 0000 0000 00c0 0000 00f8 0600 ................

00b4f0a0: 4951 5f43 4150 2e42 494e 0000 0000 0000 IQ_CAP.BIN......

00b4f0b0: 0000 0000 0000 0000 0000 0000 0000 0000 ................

00b4f0c0: 0000 0000 0000 0000 0000 0000 0000 0000 ................

00b4f0d0: 0000 0000 0000 0000 00c0 0700 8063 0000 .............c..

<snipped a looong list of file headers>

00b518a0: 6c63 645f 6372 6f73 732e 6a70 6700 0000 lcd_cross.jpg...

00b518b0: 0000 0000 0000 0000 0000 0000 0000 0000 ................

00b518c0: 0000 0000 0000 0000 0000 0000 0000 0000 ................

00b518d0: 0000 0000 0000 0000 00c0 5507 6d3d 0100 ..........U.m=..

<end of the file headers? the following is not a filename>

00b518e0: 2001 0481 0000 005a 0000 7ff8 0000 014d ......Z.......M

00b518f0: 0000 015b 0000 015d 0000 0000 0000 0000 ...[...]........

We have an obvious magic string (SF_RESOURCE), followed by a slightly weird

version string ("01.0"), an 0xa3 byte and some zeroes to align to the next

32 bytes.

Then comes what looks like a file system with "NXMINI.HEX", "cd.iso", "IQ_CAP.BIN" etc...

Each file seems to have a 64-byte header, starting with the filename and

ending with some numbers. The first filename is at 0xb4f020, the first

non-filename is at 0xb518e0, giving us (0xb518e0 - 0xb4f020)/64 = 163 =

0xa3 files, and confirming that the header contains the number of files in

the resource section. Given that the header numbers are little-endian, the

number of files is probably not just one byte, but maybe two or four.

The numbers in each file header seem to be two little-endian integers, with

the first one growing linearly (0x0, 0xc000, 0x7c000, ... 0x755c000),

and the second one varying (0x920c, 0x6f800, 0x6380, ... 0x13d6d).

From that we can assume that the first number is the offset of the file,

relative to the end of the file headers (first one is 0), and the second value is most probably

the respective size. We can transfer this knowledge into a tool to print and

dump the resouce section,

sfresource.py:

| Filename | Offset | Size | Filename | Offset | Size | |

|---|---|---|---|---|---|---|

| NXMINI.HEX | 0x00000000 |

37388 | cd.iso | 0x0000c000 |

456704 | |

| IQ_CAP.BIN | 0x0007c000 |

25472 | IQ_COMM.BIN | 0x00084000 |

60 | |

| IQ_M_FHD.BIN | 0x00088000 |

24784 | IQ_M_HD.BIN | 0x00090000 |

24784 | |

| IQ_M_SD.BIN | 0x00098000 |

26096 | IQ_V_FHD.BIN | 0x000a0000 |

24784 | |

| IQ_V_HD.BIN | 0x000a8000 |

24784 | IQ_V_SD.BIN | 0x000b0000 |

26096 | |

| cac_par1.BIN | 0x000b8000 |

4896 | cac_par2.BIN | 0x000bc000 |

4980 | |

| cac_par3.BIN | 0x000c0000 |

4980 | cac_par4.BIN | 0x000c4000 |

4980 | |

| cac_par5.BIN | 0x000c8000 |

4980 | COMMON.BIN | 0x000cc000 |

58 | |

| CAPTURE.BIN | 0x000d0000 |

57004 | dt_bg.jpg | 0x000e0000 |

117219 | |

| file_ng.jpg | 0x00100000 |

16356 | logo.bin | 0x00104000 |

307200 | |

| wifi_bg.yuv | 0x00150000 |

691200 | mplay_bg.jpg | 0x001fc000 |

177465 | |

| PRD_CMD.XML | 0x00228000 |

15528 | res.dat | 0x0022c000 |

85463552 | |

| Hdmi_res.dat | 0x053b0000 |

4492288 | Hdmi_f_res.dat | 0x057fc000 |

3332608 | |

| pa_1.jpg | 0x05b2c000 |

548076 | pa_1p.jpg | 0x05bb4000 |

113106 | |

| pa_2.jpg | 0x05bd0000 |

275490 | pa_2p.jpg | 0x05c14000 |

51899 | |

| pa_3.jpg | 0x05c24000 |

283604 | pa_3p.jpg | 0x05c6c000 |

73704 | |

| pa_4.jpg | 0x05c80000 |

308318 | pa_4p.jpg | 0x05ccc000 |

85517 | |

| pa_5.jpg | 0x05ce4000 |

151367 | pa_5p.jpg | 0x05d0c000 |

37452 | |

| pa_6.jpg | 0x05d18000 |

652185 | pa_6p.jpg | 0x05db8000 |

101948 | |

| pa_7.jpg | 0x05dd4000 |

888479 | pa_7p.jpg | 0x05eb0000 |

152815 | |

| Cross.raw | 0x05ed8000 |

617100 | Fisheye2.jpg | 0x05f70000 |

114174 | |

| Fisheye1.raw | 0x05f8c000 |

460800 | Fisheye3.bin | 0x06000000 |

1200 | |

| HTone3.raw | 0x06004000 |

76800 | HTone5.raw | 0x06018000 |

76800 | |

| HTone10.raw | 0x0602c000 |

76800 | Min320.raw | 0x06040000 |

19200 | |

| Min640.raw | 0x06048000 |

38400 | Min460.raw | 0x06054000 |

63838 | |

| Movie_C1.jpg | 0x06064000 |

401749 | Movie_C2.jpg | 0x060c8000 |

277949 | |

| Movie_C3.jpg | 0x0610c000 |

311879 | Movie_V1.raw | 0x0615c000 |

307200 | |

| Movie_V2.raw | 0x061a8000 |

307200 | Movie_V3.raw | 0x061f4000 |

307200 | |

| Movie_R0.raw | 0x06240000 |

1555200 | Movie_R1.raw | 0x063bc000 |

388800 | |

| Sketch0.raw | 0x0641c000 |

1443840 | Sketch1.raw | 0x06580000 |

322560 | |

| VignetC.jpg | 0x065d0000 |

191440 | VignetV.raw | 0x06600000 |

460800 | |

| VignetV_PC.raw | 0x06674000 |

614400 | FD_RSC1 | 0x0670c000 |

1781664 | |

| BD_RSC1 | 0x068c0000 |

28188 | ED_RSC1 | 0x068c8000 |

323628 | |

| SD_RSC1 | 0x06918000 |

270508 | OLDFILM1.JPG | 0x0695c000 |

154647 | |

| OLDFILM2.JPG | 0x06984000 |

158531 | OLDFILM3.JPG | 0x069ac000 |

166034 | |

| OLDFILM4.JPG | 0x069d8000 |

170281 | OLDFILM5.JPG | 0x06a04000 |

169271 | |

| BS_POW1.wav | 0x06a30000 |

104060 | BS_POW2.wav | 0x06a4c000 |

109046 | |

| BS_POW3.wav | 0x06a68000 |

94412 | BS_MOVE.wav | 0x06a80000 |

4678 | |

| BS_MOVE2.wav | 0x06a84000 |

5032 | BS_MENU.wav | 0x06a88000 |

25340 | |

| BS_SEL.wav | 0x06a90000 |

3964 | BS_OK.wav | 0x06a94000 |

3484 | |

| BS_TOUCH.wav | 0x06a98000 |

5340 | BS_DEPTH.wav | 0x06a9c000 |

4904 | |

| BS_CANCL.wav | 0x06aa0000 |

17708 | BS_NOBAT.wav | 0x06aa8000 |

194228 | |

| BS_NOKEY.wav | 0x06ad8000 |

13308 | BS_INFO.wav | 0x06adc000 |

12168 | |

| BS_WARN.wav | 0x06ae0000 |

20768 | BS_CONN.wav | 0x06ae8000 |

88888 | |

| BS_UNCON.wav | 0x06b00000 |

44328 | BS_REC1.wav | 0x06b0c000 |

26632 | |

| BS_REC2.wav | 0x06b14000 |

47768 | BS_AF_OK.wav | 0x06b20000 |

10612 | |

| BS_SHT_SHORT.wav | 0x06b24000 |

4362 | BS_SHT_SHORT_5count.wav | 0x06b28000 |

35870 | |

| BS_SHT_SHORT_30ms.wav | 0x06b34000 |

1978 | BS_SHT_Conti_Normal.wav | 0x06b38000 |

44236 | |

| BS_SHT_Conti_6fps.wav | 0x06b44000 |

22904 | BS_SHT1.wav | 0x06b4c000 |

63532 | |

| BS_SHT_Burst_10fps.wav | 0x06b5c000 |

552344 | BS_SHT_Burst_15fps.wav | 0x06be4000 |

375752 | |

| BS_SHT_Burst_30fps.wav | 0x06c40000 |

257624 | BS_COUNT.wav | 0x06c80000 |

2480 | |

| BS_2SEC.wav | 0x06c84000 |

87500 | BS_SHT_LONG_OPEN.wav | 0x06c9c000 |

16000 | |

| BS_SHT_LONG_CLOSE.wav | 0x06ca0000 |

15992 | BS_MC1.wav | 0x06ca4000 |

13944 | |

| BS_FACE1.wav | 0x06ca8000 |

36428 | BS_FACE2.wav | 0x06cb4000 |

36048 | |

| BS_FACE3.wav | 0x06cc0000 |

3428 | BS_JINGLE.wav | 0x06cc4000 |

218468 | |

| BS_MEW.wav | 0x06cfc000 |

208920 | BS_DRIPPING.wav | 0x06d30000 |

102244 | |

| BS_TIMER.wav | 0x06d4c000 |

30188 | BS_TIMER_2SEC.wav | 0x06d54000 |

381484 | |

| BS_TIMER_3SEC.wav | 0x06db4000 |

278956 | BS_ROTATION.wav | 0x06dfc000 |

5316 | |

| BS_NFC_START.wav | 0x06e00000 |

124714 | BS_TEST.wav | 0x06e20000 |

24222 | |

| im_10_1m.bin | 0x06e28000 |

123154 | im_13_3m.bin | 0x06e48000 |

134802 | |

| im_16_9m.bin | 0x06e6c000 |

191378 | im_1_1m.bin | 0x06e9c000 |

10578 | |

| im_20m.bin | 0x06ea0000 |

238290 | im_2m.bin | 0x06edc000 |

27218 | |

| im_2_1m.bin | 0x06ee4000 |

23570 | im_4m.bin | 0x06eec000 |

40338 | |

| im_4_9m.bin | 0x06ef8000 |

57618 | im_5m.bin | 0x06f08000 |

65682 | |

| im_5_9m.bin | 0x06f1c000 |

76114 | im_7m.bin | 0x06f30000 |

69906 | |

| im_7_8m.bin | 0x06f44000 |

92178 | im_vga.bin | 0x06f5c000 |

3474 | |

| set_bg.jpg | 0x06f60000 |

13308 | DV_DSC.jpg | 0x06f64000 |

18605 | |

| DV_DSC.png | 0x06f6c000 |

2038 | DV_DSC_S.jpg | 0x06f70000 |

12952 | |

| DV_DSC_S.png | 0x06f74000 |

740 | DEV_NO.jpg | 0x06f78000 |

29151 | |

| wifi_00.bin | 0x06f80000 |

13583 | wifi_01.bin | 0x06f84000 |

66469 | |

| wifi_02.bin | 0x06f98000 |

87936 | wifi_03.bin | 0x06fb0000 |

63048 | |

| wifi_04.bin | 0x06fc0000 |

113645 | wifi_05.bin | 0x06fdc000 |

172 | |

| wifi_06.bin | 0x06fe0000 |

12689 | wifi_07.bin | 0x06fe4000 |

12750 | |

| wifi_08.bin | 0x06fe8000 |

3933 | cNXMINI.bin | 0x06fec000 |

2048 | |

| net_bg0.jpg | 0x06ff0000 |

7408 | net_bg2.jpg | 0x06ff4000 |

7409 | |

| net_bg3.jpg | 0x06ff8000 |

7409 | qwty_bg.jpg | 0x06ffc000 |

10953 | |

| net_bg0.yuv | 0x07000000 |

691200 | net_bg2.yuv | 0x070ac000 |

691250 | |

| net_bg3.yuv | 0x07158000 |

691208 | qwty_bg.yuv | 0x07204000 |

691200 | |

| ChsSysDic.dic | 0x072b0000 |

1478464 | ChsUserDic.dic | 0x0741c000 |

31744 | |

| ChtSysDic.dic | 0x07424000 |

1163484 | ChtUserDic.dic | 0x07544000 |

31744 | |

| lcd_grad_cir.jpg | 0x0754c000 |

26484 | lcd_grad_hori.jpg | 0x07554000 |

32586 | |

| lcd_cross.jpg | 0x0755c000 |

81261 |

The JPEG files are backgrounds and artistic effects, the WAV files are shutter,

timer and power-on/off effects. cd.iso is the i-Launcher install CD that

the camera emulates over USB. PRD_CMD.XML is a structured list of

"Production Mode System Functions":

<!--Production Mode System Functions-->

<pm_system>

<!------------Key Command-------------->

<key cmd_id="0x1">

<s1 index_id="0x1">s1</s1>

<s2 index_id="0x2">s2</s2>

<menu index_id="0x3">menu</menu>

...

<ft_mode index_id="0x11">ft_mode</ft_mode>

<ok_ng index_id="0x12">ok_ng</ok_ng>

</key>

<!------------Touch Command-------------->

<touch cmd_id="0x2">

<mask index_id="0x1">mask</mask>

<unmask index_id="0x2">unmask</unmask>

</touch>

...

</pm_system>

The last file ends at 0xb518e0 + 0x755c000 + 81261 = 0x80c164d - can we find

more surprise sections after that?

╭─────────────────────────

080c1640: 1450 0145 0014 5001 4500 7fff d9│00 0000 .P.E..P.E.......

──────────────────────────────────────────╯

080c1650: 0000 0000 0000 0000 0000 0000 0000 0000 ................

*

080c18e0: c075 12cf e018 0c08 fc00 0000 0000 0000 .u..............

080c18f0: 0000 0000 ....

<EOF>

There is some more padding and an unknown 9-byte value. It might be a checksum, verification code or similar. We can probably ignore that for now.

The SF_RESOURCE chunk without this unknown "checksum" is 0x80c164d -

0xb4f000 bytes, or ~117MB.

The code sections

The section_info variable was outlining some sort of partitioning. So far

we have found the writer (320KB), the code block (11MB) and the SF_RESOURCE

chunk (117MB) in the .bin file. There is no space in the .bin to fit

another 9.3MB, unless it is within one of the already-identified parts.

Given that the "code" part is 11MB and the sections are 9.3MB, they might

actually fit into the code part. Let's see what is at offset_code +

section[1].size = 0x50000 + 0x50e66c = 0x55e66c:

╭───────────────────────────

0055e660: 58b7 33e1 1f00 8000 0000 0000│0000 0000 X.3.............

───────────────────────────────────────╯

0055e670: 0000 0000 0000 0000 0000 0000 0000 0000 ................

*

0055e800: 6ab3 0000 70b4 022b 08bf 5200 002a 4ff0 j...p..+..R..*O.

Okay, there is actual data and some zeroes, then 404 zero bytes until some

more data comes. Apparently those 404 bytes are padding the first section to

some alignment boundary - maybe it's block_size = 0x400 from the header?

At 0x55e800 + section[2].size = 0x704185 there is a similar picture of

trailing zeroes within the expected section, followed by zero padding:

╭─────────────────────────────────────────────

00704180: 0100 0000 00│00 0000 0000 0000 0000 0000 ................

──────────────────────╯

00704190: 0000 0000 0000 0000 0000 0000 0000 0000 ................

*

00704200: 800c 7047 0000 0300 0000 0000 0000 0000 ..pG............

────────────────────────────────────────────────────────────────────

00704210: 0000 0000 0000 0000 0000 0000 0000 0000 ................

*

00704400: 4efb 0001 10b5 7648 a1f6 20de 75a0 a1f6 N.....vH.. .u...

Hovever, 0x704200 is not divisible by 0x400, so we need to correct our

assumptions on the section alignment. Section #3 at 0x704200 is only 0x10 = 16 bytes,

and is followed by the next section at 0x704400, giving us an effective

alignment of 0x200 bytes.

In total, we end up with seven sections as follows, and we can

extend

m7mu.py

with the -x argument to extract all partitions (even including the writer

and the resources):

| Offset | Size | Section |

|---|---|---|

0x050000 |

5301868 | chunk-01.bin |

0x55e800 |

1726853 | chunk-02.bin |

0x704200 |

16 | chunk-03.bin |

0x704400 |

400660 | chunk-04.bin |

0x766200 |

4098518 | chunk-05.bin |

0xb4ec00 |

16 | chunk-06.bin |

0xb4ee00 |

16 | chunk-07.bin |

This is continued in part 2: reverse-engineering the LZSS compression, where we find out how the compression of the seven chunks works.

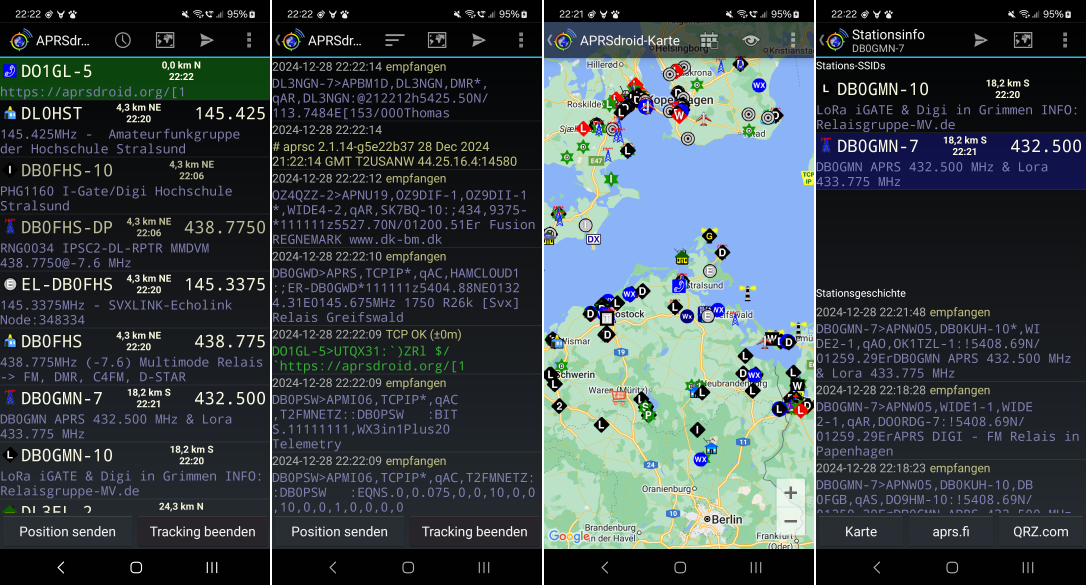

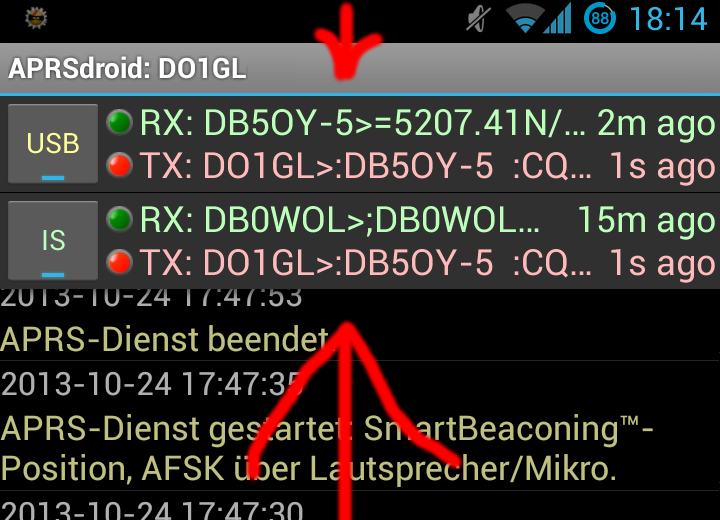

APRSdroid is an Amateur Radio geo-location (APRS) app for Android licensed under the GPL. It started as a Scala learning experience at New Year's eve 2009.

This post is a review of 15 years of the project and related developments in the ham radio world. There is also the two-year recap of the app and the Scala on Android experience that I wrote in 2011.

The evolution of APRSdroid

In 2009, when I started developing the app, the HTC Dream (a.k.a. "T-Mobile G1") was still the go-to Android phone, Android 2.0 ("Eclair") was just released with the Motorola Milestone/Droid featuring the new OS, and Google Play was a one-year old thing called Android Market.

User interface changes

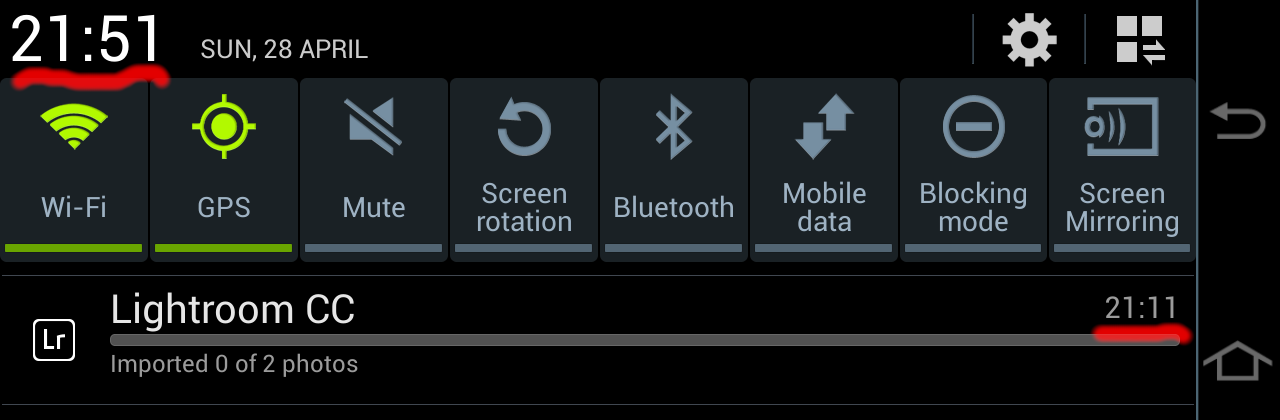

When APRSdroid became ready for public release with version 1.0 (April 2011), its basic user interface consisted of four different views:

- Hub: a list of stations, sorted by distance, with the most important info like calling frequency

- Log: the full log of incoming and outgoing packets as well as internal info

- Map: a map view with stations and their movement

- Station Info: data about a single station and its published objects

While Android has gone through eighteen(!) major releases, two major face-lifts (3.0 Honeycomb added "Holo" UI and tablet support with fragments, 5.0 Lollipop replaced Holo with "Material" design), innumerable changes to the UI widgets and system menus, got rid of QWERTY and then of most other physical buttons, APRSdroid largely remained the same all this time:

Version 1.1 (September 2011) added APRS messaging support, allowing to send text messages to other near-by users. This feature came with a chat window and a conversations window.

Initially, the app was using the original APRS symbols, a set of hand-drawn 16x16 pixel pictures depicting different types of APRS stations. With the increasing display densities, those became impractical, and Heikki Hannikainen OH7LZB created a new, vectorized symbol set. These were included in APRSdroid in version 1.4 (July 2017).

Other than that, minor usability helpers were added over the years, like the support for d-pad and ⏪ ⏩ keys on the map view, to better support the FireTV.

The benefit of the conservative approach is that the app will still support Android 4.0 devices (released in 2011). While nobody should use an Android 4.x as their primary device today, there is still a (vocal) minority of APRS users that want to run the app on an old Chinese tablet or their previous smartphone.

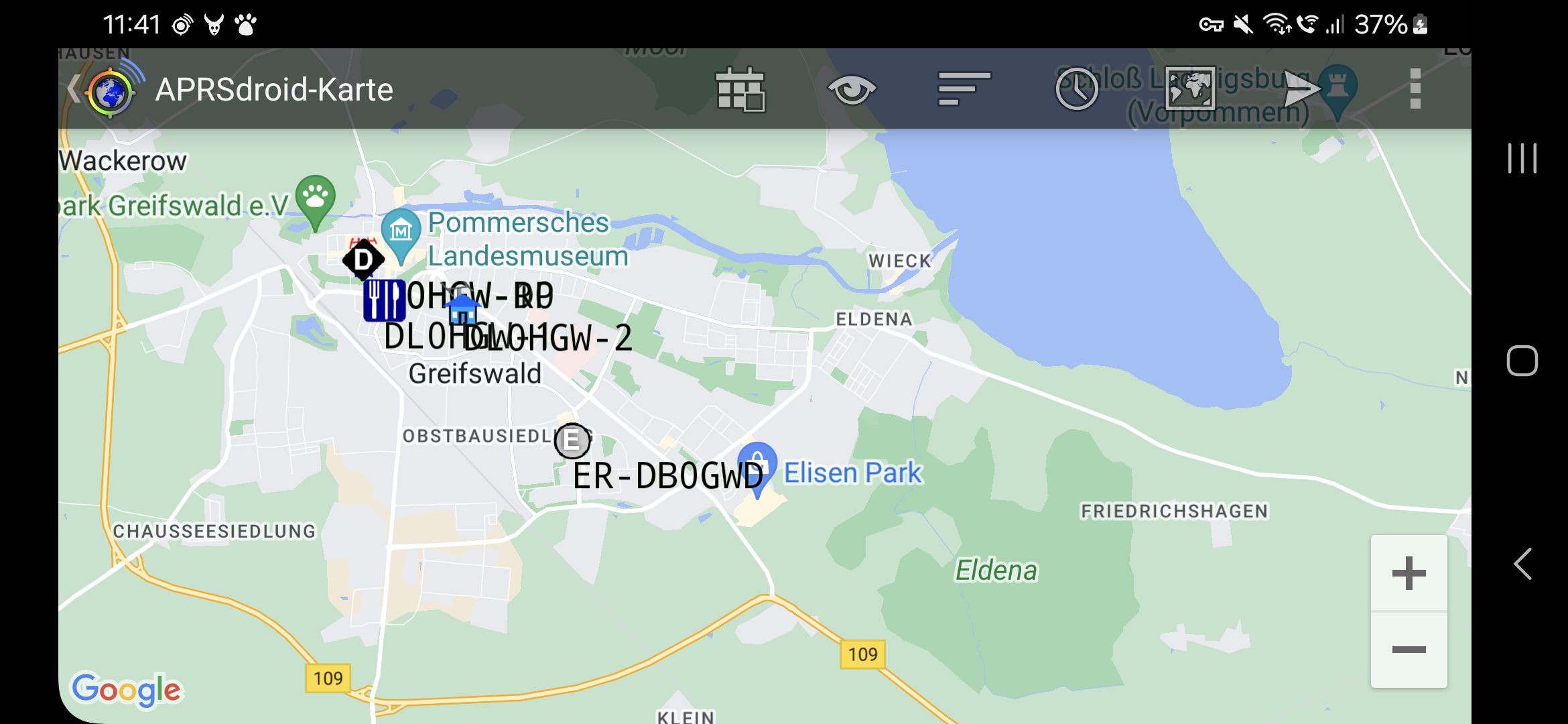

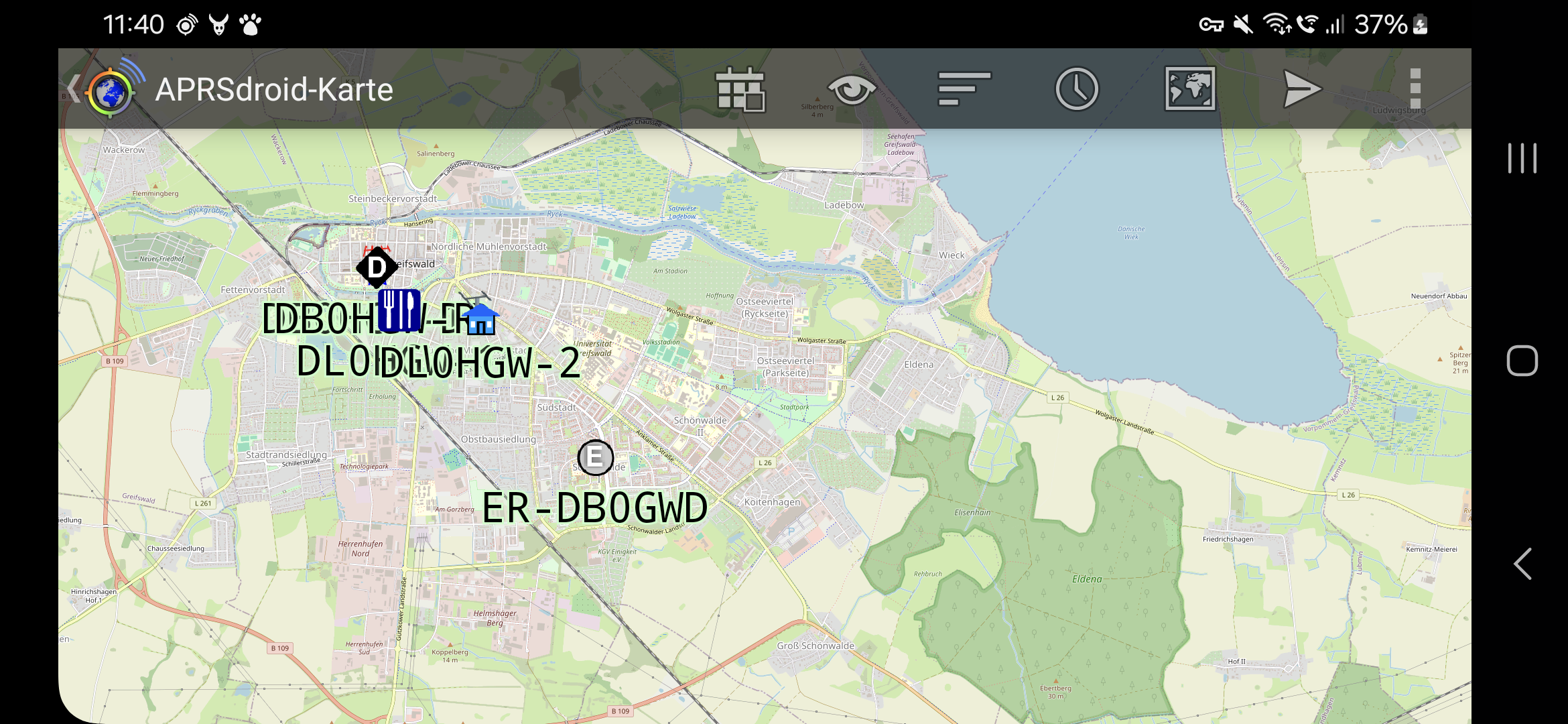

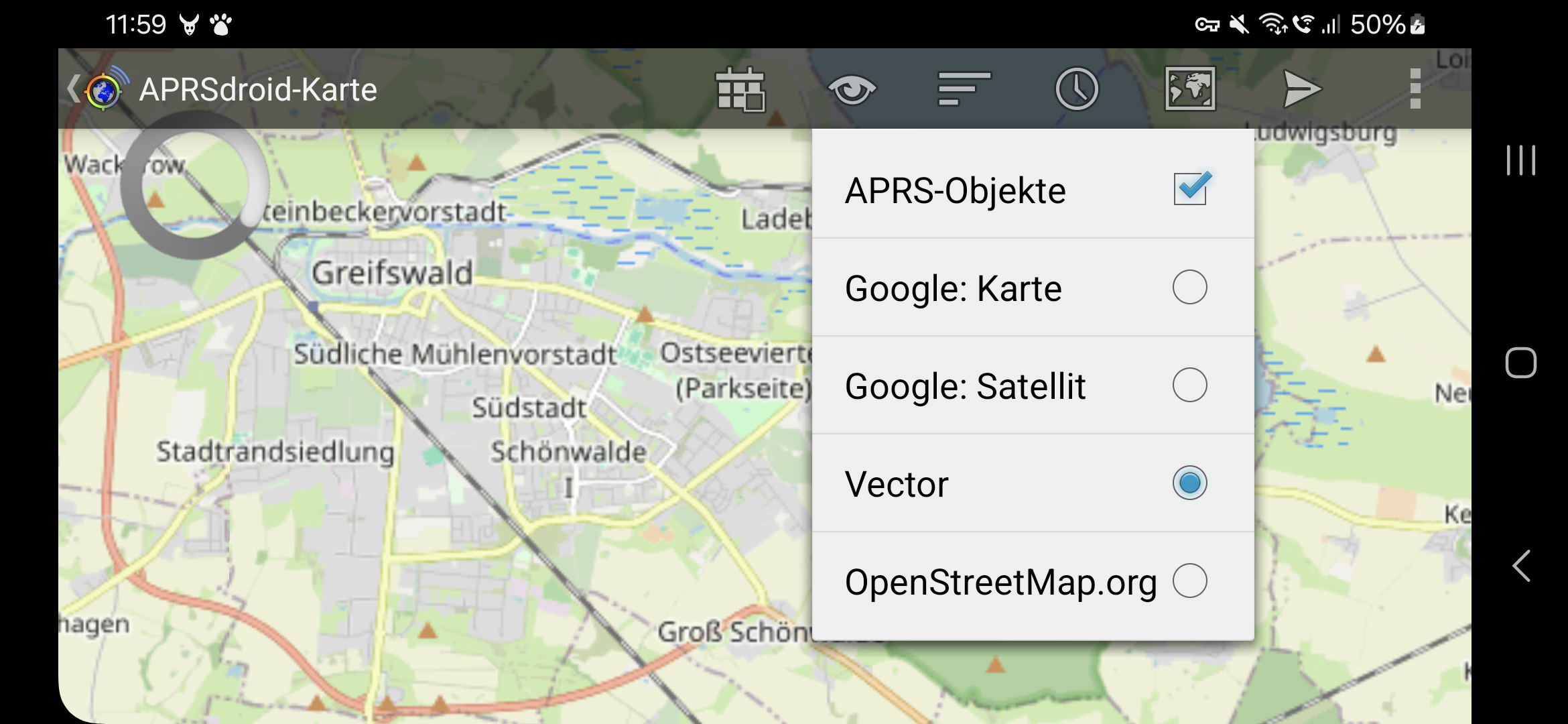

OpenStreetMaps and offline maps

Google Maps was the first (and only, for a long time) map renderer usable in Android apps. Many APRSdroid users wanted to run the app off-grid, requiring support for offline maps. There was no way to implement that with the Google API, so an alternative map rendering library had to be found.

Luckily, back in 2011, the MapsForge library seemingly appeared out of nowhere, providing an offline map renderer and tile-server support. Rendering maps is a huge task, and we take it for granted easily, but significant effort was made to make it possible and to provide it for free.

MapsForge was also used by the c:geo app, providing helpful usage examples.

The first APRSdroid offline map implementation started in 2011 and was maintained as an alternative build that required side-loading the APK and downloading the map file from one of a number of mapsforge build severs. It was also the one used on the Amazon app store, because Amazon devices aren't allowed to use Google Play services (which include the map rendering).

The separate build was only merged with the mainline build in 2019, including a live detection of whether Google Maps is present on the device.

The "classic" mapsforge renderer is a bit outdated, doesn't support hi-dpi

screens, making the map labels barely readable, and requires direct File

access to the map files, which is prohibited on modern Android releases.

It will be replaced in the near future by the Vector Tile Map (VTM) OpenGL renderer which is more perfomant and more flexible.

Personal X.509 certificates

The American Radio Relay League (ARRL) is operating a Public Key Infrastructure (PKI) for radio amateurs and issues X.509 client certificates after verifying the amateur's license. The certificate contains the following fields:

- CN (Common Name): person name of the amateur

- EMAIL: a veriifed email address

- CALLSIGN (OID.1.3.6.1.4.1.12348.1.1): the amateur radio callsign

This is an excellent way to authenticate amateurs over the Internet, except that browsers have messed up the user interface for certificate authentication so badly that nobody is touching it with a ten-foot pole.

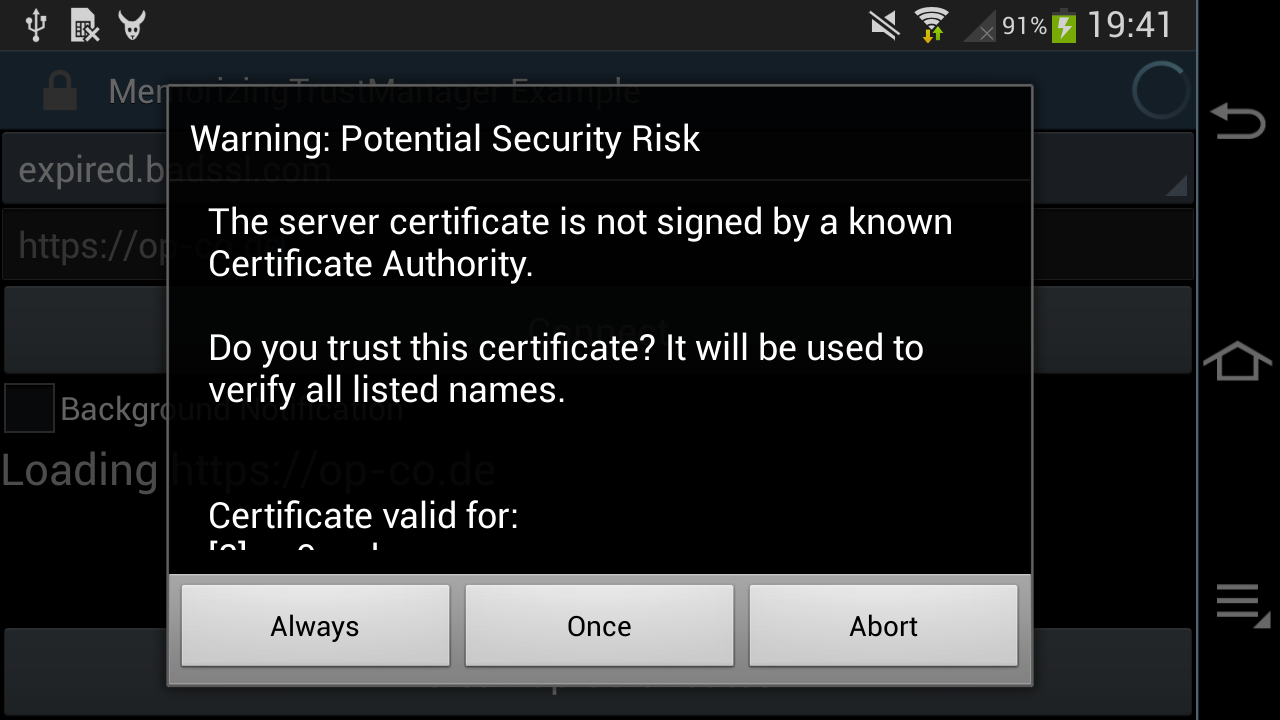

However, the UI issues can be solved more elegantly in an app. Therefore, in 2013, the APRS Tier2 network and APRSdroid implemented experimental SSL client authentication.

The feature works by loading a .p12 certificate file for your callsign into

the app, and then it will automatically try to use TLS when connecting.

Given that amateur radio requires clear-text communication, this is one of the

very few legitimate use-cases for the NULL cipher in TLS.

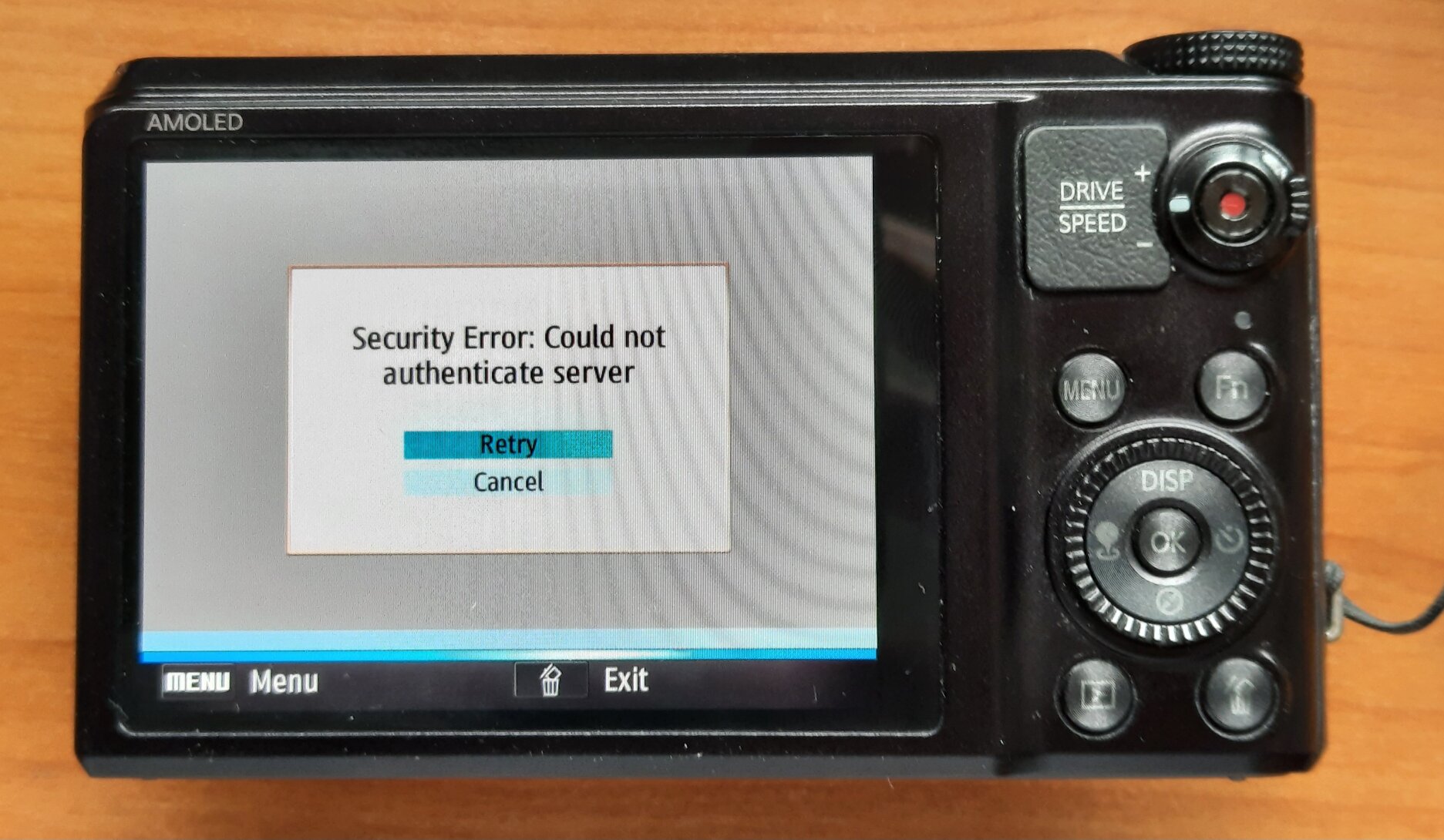

Unfortunately, running TLS on the server side also requires an operational PKI, and that was never completed. Eventually, the certificate validation started failing when the respective chains of trust expired.

Radio connection support

The first versions of the app only supported APRS-IS connections over the Internet, not actually sending and receiving packets over a locally connected radio. However, support for more and more radio connections got added over the years.

Audio-cable AFSK

Version 0.8 (October 2010) added AFSK encoding support using jsoundmodem, allowing to connect an audio cable from the phone to a radio with voice activation, and the app would play the 1200 bps signal over the headphones, triggering a radio transmission.

With version 1.2 (February 2012), the app also integrated AFSK decoding

by means of the PacketDroid

java-native wrapper around multimon. The native code required to summon

the Android NKD during the build process,

but at the time, the Dalvik

runtime on Android provided only minimal

JIT optimizations

and thus Java code wasn't fast enough to perform the required math on 11'025

samples per second on most smartphone CPUs.

A few months later, I was approached by Sivan Toledo 4X6IZ, a researcher who published "A High-Performance Sound-Card AX.25 Modem", an optimized AFSK demodulator written in Java. Together, we integrated it into APRSdroid, and it became the optional "High-Quality Demodulator" that requred an 800MHz CPU. That speed requirement was obsoleted by the switch to the ART runtime in Android 5. The new demodulator became part of version 1.2.2 (November 2012).

The audio modulation using the phone's soundcard never was expected to be a robust feature, given that:

- the Android audio stack isn't fully real-time (so that minor distortions can corrupt a transmission)

- accidental notification sounds or ringing would be directly transmitted over radio

- a cable also carries a part of the RF signal from the transmitting radio, which can crash the Android phone

However, due to the availability of cheap DIY cables and inexpensive Chinese radios, it ended up as the users' favorite.

Bluetooth TNC

A more robust (and electrically decoupled) mechanism was to use Bluetooth SPP serial port emulation to connect to a TNC. At the time, cheap stand-alone Bluetooth serial adapters were flooding the market, and it was rather easy to use one to give new life to an old TNC, or to link the app to a radio with integrated TNC, like the Kenwood D7x0 series. This support was added in version 1.1 (September 2011).

Having a dedicated TNC and radio was not very practical for mobile use, and prohibitive for portable operation. On the other hand, the Bluetooth controller boards turned out to have enough power to actually run the AFSK modulation and demodulation, and so single-board Bluetooth TNCs started to appear. In 2013, Rob Riggs WX9O went a step further and commercially released the Mobilinkd TNC with an integrated battery, allowing to strap the TNC to a handheld radio.

Kenwood GPS emulation

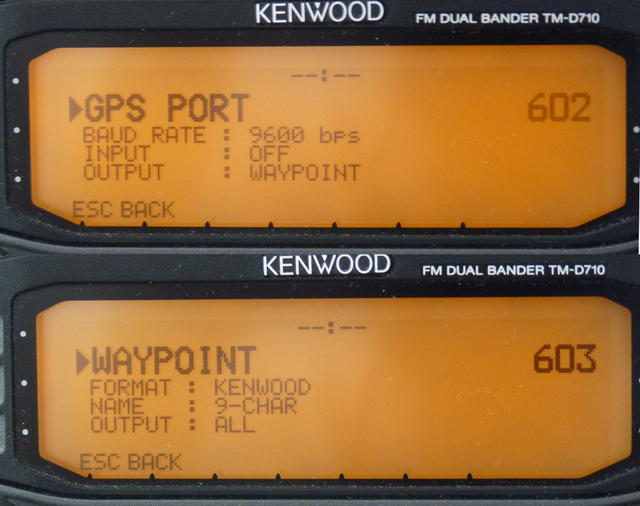

The quite common Kenwood D7x0 radios came with full APRS support, but did not feature a built-in GPS module. Instead, the GPS had to be connected over a serial port. It was possible to also export APRS station information over this port, a feature meant for some GPS units with a display.

Given that Android phones usually have GPS and a display, version 1.2.3 (August 2013) also introduced a Kenwood GPS mode.

The app would forward the GPS NMEA traffic from the phone's receiver over Bluetooth SPP, and would receive and show the APRS stations decoded by the Kenwood radio.

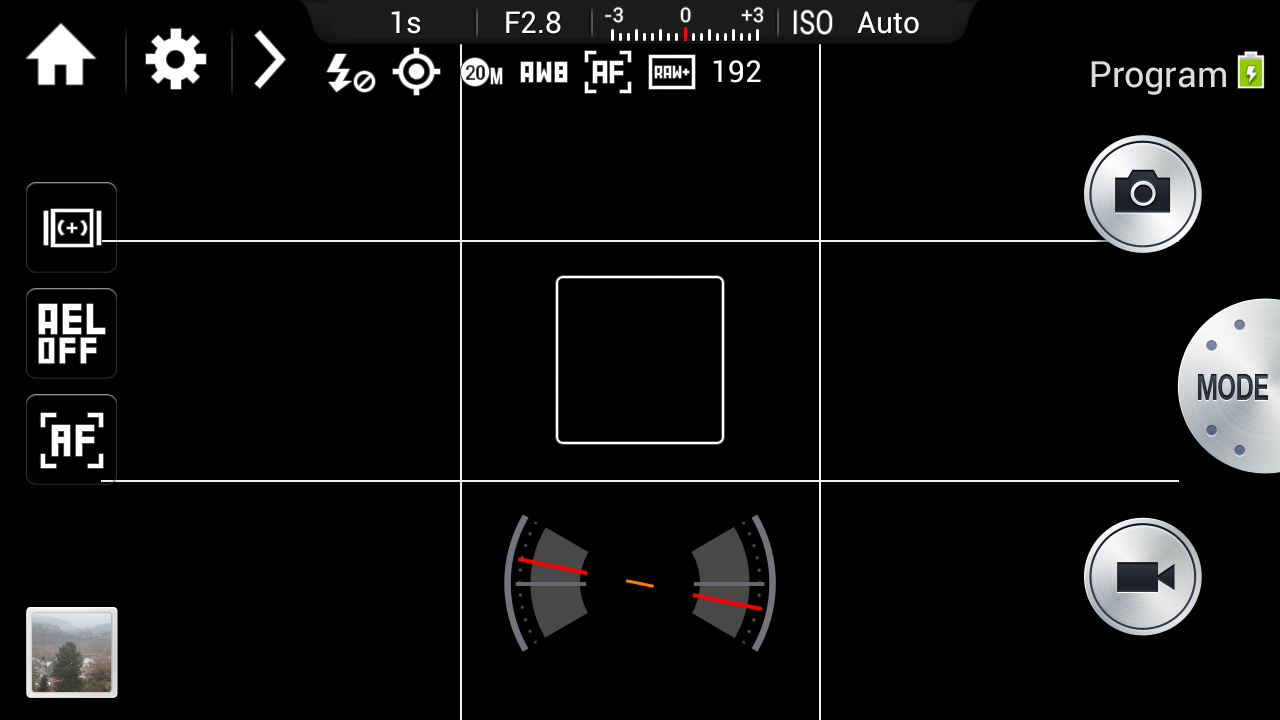

USB serial support

Android 3.1 introduced USB host support in 2011. However, it was a generic low-level interface that required actually re-writing the low-level protocol drivers in Java. It took until 2014, when Felipe Herranz created the open-source UsbSerial library that implemented this low-level support for different USB serial chipsets.

In 2015, this library was experimentally added to APRSdroid beta builds. The new addition also required a refactoring to decouple the on-the-wire protocol (KISS or TNC2 for TNCs, Kenwood GPS, APRS-IS) from the connection method (USB serial, Bluetooth SPP serial, TCP/IP, UDP). This significantly increased the flexibility of APRSdroid and was officially introduced in version 1.4 (July 2017).

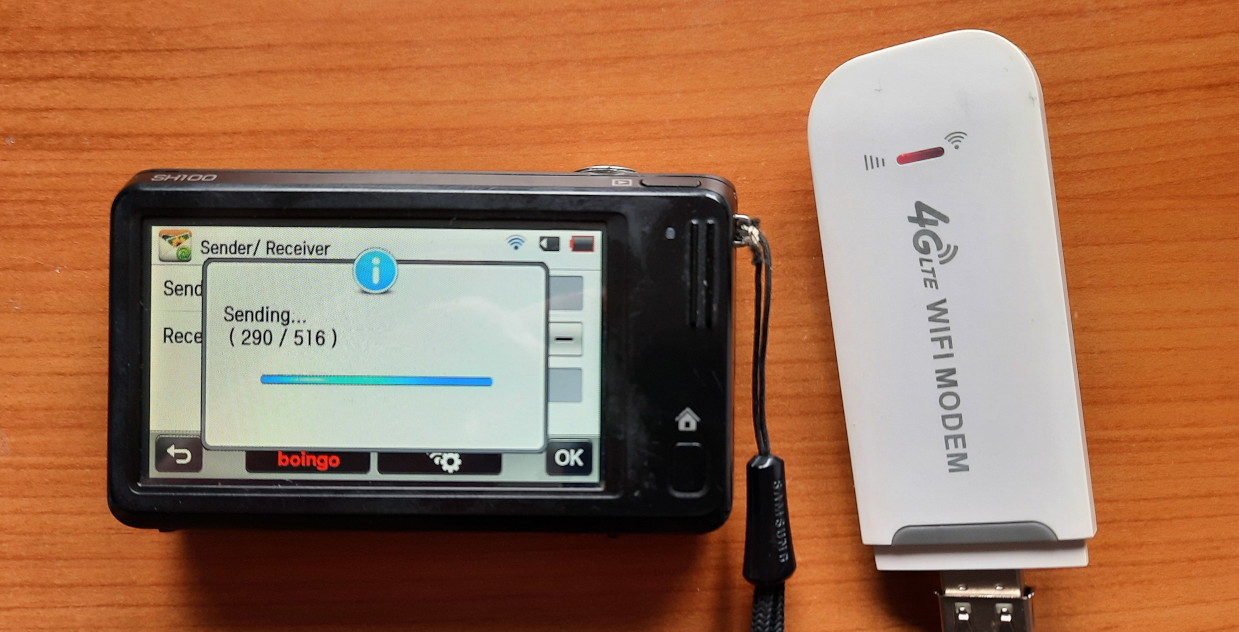

This not only allowed connecting to radios that have a USB port, like the Kenwood TH-D72:

It also allowed to pair APRSdroid with PicoAPRS, the world's smallest integrated APRS transceiver created by Taner Schenker DB1NTO!

Source code activity

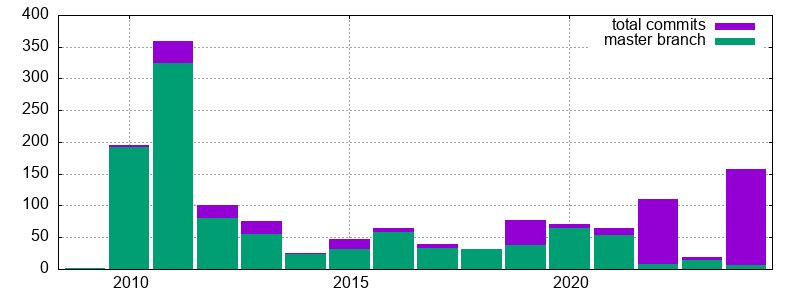

The whole project history, starting with the first commit on December 31st, 2009, is public.

There were years with significant activity, as well as calmer ones. In the last two years, the app development has stalled a bit, basically only doing the required chores to keep up with the tightening Google Play requirements:

The two violet spikes in 2022 and 2024 are contributions that haven't been reviewed or merged yet. In 2022, Loren M. Lang K7IW did some major work on CI integration, build system standardization, and UI test cases. This year, Michael A Phelps NA7Q added some interesting features that have been requested by the community, which are currently being prepared for integration.

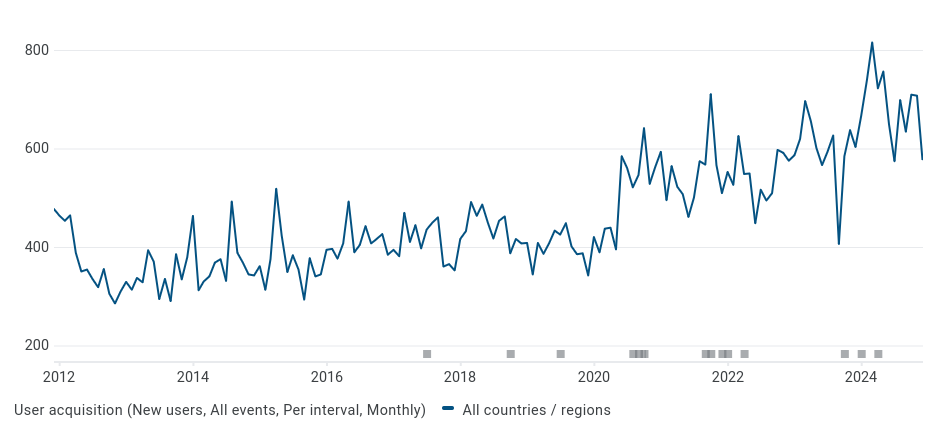

At the same time, the app's popularity is still growing, despite my early fears that the market for Android-using radio amateurs would be small and get saturated in the first year or two:

Scala and the Android build system

In the early days of Android, Ant was the default

build system for apps. Building APRSdroid required

a few custom rules

to inject the Maps API key into the map view, to run scalac from

the project's tools directory, and to do a non-obfuscating

ProGuard run to optimize away most

of the Scala runtime library that would otherwise exceed the 64K classes limit

of Dalvik.

From Ant to Gradle

When Android switched to gradle and Android Studio in 2014, there was no trivial path to integrate Scala into the new build system, so I postponed the transition.

Eventually, in 2017, the benefits of Android Studio for live debugging

became too big to ignore, and I re-evaluated the situation and found

gradle-android-scala-plugin.

That plugin allowed building Scala source code as part of an Android

application project. With a few custom path settings in build.gradle it was

possible to drop it into an existing project without moving the source files

around. However, it insisted on compiling every file in the source

directory, including VIM swap files:

> Task :compileDebugScala

Pruning sources from previous analysis, due to incompatible CompileSetup.

IO error while decoding /usr/src/aprsdroid/src/.AprsPacket.scala.swp with UTF-8

Please try specifying another one using the -encoding option

one error found

> Task :compileDebugScala FAILED

FAILURE: Build failed with an exception.

That was annoying, but not a show-stopper. The plugin also became unmaintained in 2016 and was limited to gradle 2.x, which Google obsoleted for Android apps. Luckily, a fork by AllBus was still being worked on and kept supporting gradle up to 5.6 and up to Android 13 (r33).

Unfortunately, the build time grew to ~3 minutes on my laptop (mostly blocked

by the single-threaded :transformClassesAndResourcesWithR8ForDebug

optimization pass), and half of the builds went subtly wrong and created an APK

without class files.

Android 14 Support

It looks like the AllBus fork of gradle-android-scala-plugin has arrived at

its final destination as well. The code wasn't updated since 2020, and it

won't work with newer gradle versions.

Also, so far all my attempts to understand the Groovy failed miserably, either because I fell over its "flat learning curve" or because I lacked the patience to understand all the required internals of Gradle.

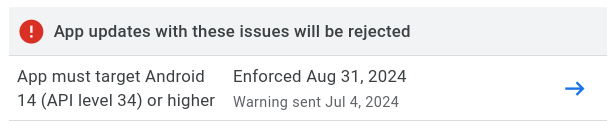

Meanwhile, Google is ruthlessly moving its goal-posts. For one, any new updates published to Google Play must support Android 14 (r34):

In addition, new SDK updates come with a new recursive chain of dependencies on the card house of the build ecosystem. The JDK compatibility level has been raised from 8 over 11 to 17. Trying too old tools yields funny error messages:

Using an older combination of Gradle (7.x) and the Android Gradle Plugin (4.2.0) says the SDK is corrupted (because the old code can't read new class files?):

"Installed Build Tools revision 34.0.0 is corrupted. Remove and install again using the SDK Manager."

Going up to Android plugin 7.0 changes the error to a missing variantConfiguration, which apparently is used by

gradle-android-scala-plugin:No such property: variantConfiguration for class: com.android.build.gradle.internal.variant.TestVariantData

Wow! The

GitHub network graph

shows that there is a new

fork by NCrashed

(from 2023) with Gradle 8.0.x support! It needs to be

installed locally

to

mavenLocal()

and we can bump the JDK to 17, Gradle to 8.0.2, the Android plugin to 8.1.0,

and then... it still doesn't work!

The value for task ':compileDebugJavaWithJavac' property 'destinationDirectory' is final and cannot be changed any further.

The recommendation for the last error is to downgrade Gradle from 6.3 to 6.0.1! 🤡

It looks like gradle-android-scala-plugin was playing with the Java paths to

prevent duplicate compilation,

back in 2014?! This needs to be patched out in some non-obvious way before the

plugin can do its work.

Regardless of this, there is also the Mill build tool with WIP Android support, but building Scala apps for Android isn't on the agenda yet.

One way or another, this will need some more debugging and fiddling in the very near future, to prevent APRSdroid from vanishing from Google Play.

Outlook

The whole Scala building situation has been a major road-block on finding the motivation and patience to work on the app. The thought of rewriting it from scratch in plain Java or Kotlin appeared more than once, and a realistic assessment of the time required for a re-write and for fixing all the new (and old) bugs buried the idea every time... so far.

There are two often asked-for features that have been on the roadmap for a small eternity already.

Bluetooth LE support

In 2019, I started work on Bluetooth Low Energy support. However, the Android Bluetooth LE stack is a prima donna, and mis-treating it in the slightest way will end up in BLE GATT Error 133.

The fun thing about Error 133 is that you don't know which part you touched in the wrong way. Often it's related to calling the BLE stack from another thread than the main thread, but it's not the only potential cause.

While I was able to roll out a BLE-based payment solution for iOS, Android and Linux back in 2015 (which is material for another story), my karma must have left me, and I wasn't able to complete the BLE functionality over Error 133. The branch remained unpublished, and eventually NA7Q made a new attempt at it, that needs to be reviewed and integrated.

Bluetooth LE will not only preserve the battery on newer integrated TNCs like the TNC3, but will also open the app to LoRa-APRS, a mesh network that can be accessed with $20 modems like the LILYGO LoRa32.

IGate functionality

The second important feature request is IGate support. An IGate is an Internet Gateway for forwarding packets received from the radio to APRS-IS, and vice versa.

While APRSdroid supports both Internet and radio connections, it is currently limited to one connection at a time. Properly supporting multiple parallel connections, plus implementing the correct forwarding rules, will require significant refactoring.

User Interface re-design

The interface is still built around single views, and doesn't have the flexibility required on tablets and TV screens. In addition, it would be great to integrate the live status of all TNC connections, like shown in this old mockup:

Website redesign

Finally, the project website, built with hammer and chisel from HTML elements, is neither mobile-friendly, nor does allow to post news items or other structured information. The way to go is probably to convert it to a Hugo static site, which requires re-formatting all existing content and designing an appropriate theme.

This app has been successful thanks to the many projects that it's based on, the people who contributed to it, and its fantastic users.

The future of APRSdroid is set, the tasks are clear and not insurmountable, and the only thing that can delay them is conflicting real-life obligations.

Running a colo / hosted server with Full Disk Encryption (FDE) requires logging in remotely during initramfs, to unlock LUKS. The usual setup tutorials run Dropbear on a different port, to prevent a host key mismatch between OpenSSH and Dropbear, and the scary MitM warning it implies.

However, it's much cleaner and nicer to share the same host key between Dropbear during boot-up and OpenSSH during regular operation.

This recipe shows how to convert the OpenSSH host keys into the Dropbear key

format for Debian's dropbear-initramfs.

Pre-2022 Dropbear

Until dropbear/#136 was fixed in 2022, OpenSSH host keys were not supported, and Ed25519 didn't fully work either.

Regardless of the key type, OpenSSH host keys begin with the following line:

# head -1 /etc/ssh/ssh_host_*_key

==> /etc/ssh/ssh_host_ecdsa_key <==

-----BEGIN OPENSSH PRIVATE KEY-----

==> /etc/ssh/ssh_host_ed25519_key <==

-----BEGIN OPENSSH PRIVATE KEY-----

==> /etc/ssh/ssh_host_rsa_key <==

-----BEGIN OPENSSH PRIVATE KEY-----

You had to convert them to the PEM format, as follows, inplace (DO A BACKUP FIRST!):

ssh-keygen -m PEM -p -f /etc/ssh/ssh_host_ecdsa_key

ssh-keygen -m PEM -p -f /etc/ssh/ssh_host_ed25519_key

ssh-keygen -m PEM -p -f /etc/ssh/ssh_host_rsa_key

The OpenSSH server will happily read PEM format as well, so there should be no problems after that:

# head -1 /etc/ssh/ssh_host_*_key

==> /etc/ssh/ssh_host_ecdsa_key <==

-----BEGIN EC PRIVATE KEY-----

==> /etc/ssh/ssh_host_ed25519_key <==

-----BEGIN OPENSSH PRIVATE KEY-----

==> /etc/ssh/ssh_host_rsa_key <==

-----BEGIN RSA PRIVATE KEY-----

Convert OpenSSH keys for Dropbear

The dropbear-initramfs package depends on dropbear-bin which comes with

the dropbearconvert tool that we need to convert from "openssh" to

"dropbear" key format. Old versions had it in

/usr/lib/dropbear/dropbearconvert but newer one have it in /bin/ - you

might have to update the path accordingly:

dropbearconvert openssh dropbear /etc/ssh/ssh_host_ecdsa_key /etc/dropbear-initramfs/dropbear_ecdsa_host_key

dropbearconvert openssh dropbear /etc/ssh/ssh_host_ed25519_key /etc/dropbear-initramfs/dropbear_ed25519_host_key

dropbearconvert openssh dropbear /etc/ssh/ssh_host_rsa_key /etc/dropbear-initramfs/dropbear_rsa_host_key

That's it. Run update-initramfs (/usr/share/initramfs-tools/hooks/dropbear

will collect the new host keys into the initramfs) and test after the reboot.

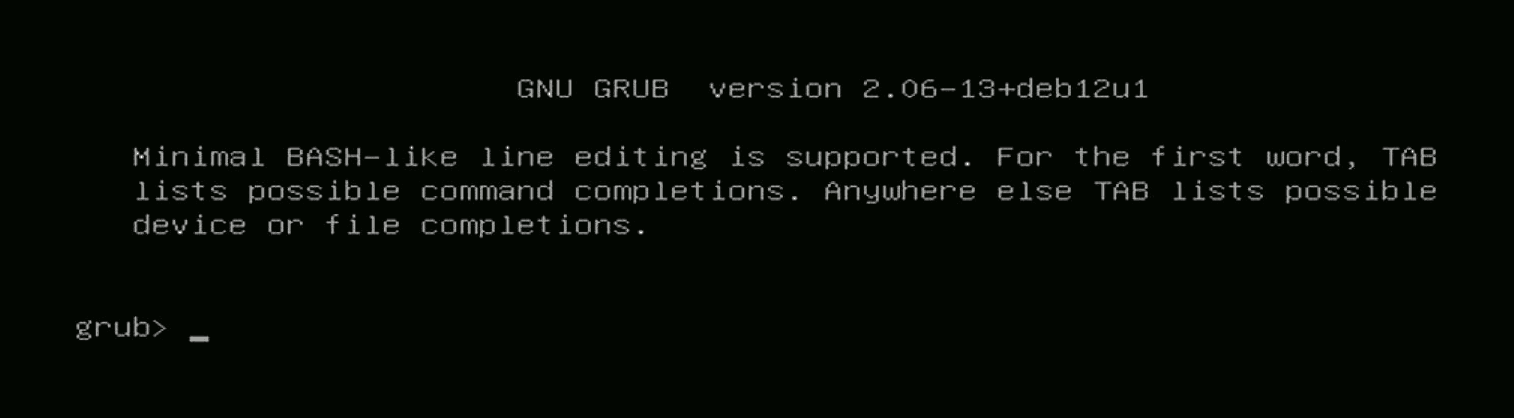

Exactly one year ago, after updating a bunch of Debian packages, my laptop stopped booting Linux. Instead, it briefly showed the GRUB banner, then rebooted into the BIOS setup. On every startup. Reproducibly. Last Friday 13th, I was bitten by this bug again, on a machine running Kali Linux, and had to spend an extra hour at work to fix it.

TL;DR: the GRUB config got extended with a call to fwsetup --is-supported. Older GRUB binaries don't know the parameter and will just reboot into the BIOS setup instead. Oops!

The analysis

Of course, I didn't know the root cause yet, and it took me two hours to isolate

the problem and some more time to identify the root cause. This post documents

the steps of the systematic analyis approach f*cking around and

finding out phase, in the hope that it might help future you and me.

Booting my Debian via UEFI or from the SSD's "legacy" boot sector reproducibly crashed into BIOS setup. Upgrading the BIOS didn't improve the situation.

Starting the Debian 12 recovery worked, however.

Manually typing the linux /boot/vmlinux-something root=UUID=long-hex-number

and initrd /boot/initrd-same-something and boot commands from the Debian 12

GRUB also brought me back into "my" Linux.

Running update-grub and grub-install from there, in order to fix my GRUB, had no positive effect.

The installed GRUB wasn't displaying anything, so I used the recovery to disable gfx mode in GRUB. It still crashed, but there was a brief flash of some text output. Reading it required a camera, as it disappeared after half a second:

bli.mod not found